Leaderboard

Popular Content

Showing content with the highest reputation on 04/29/2023 in all areas


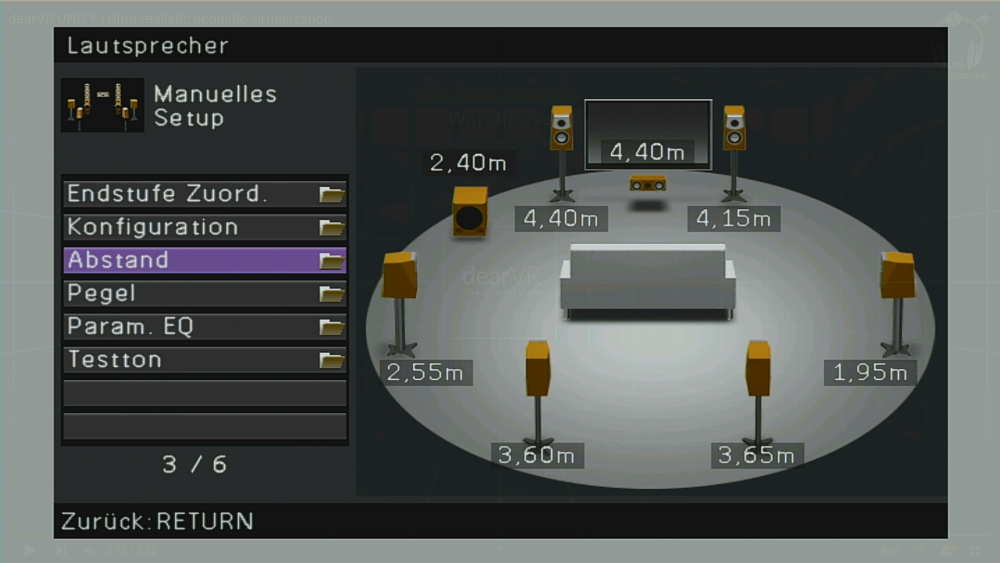

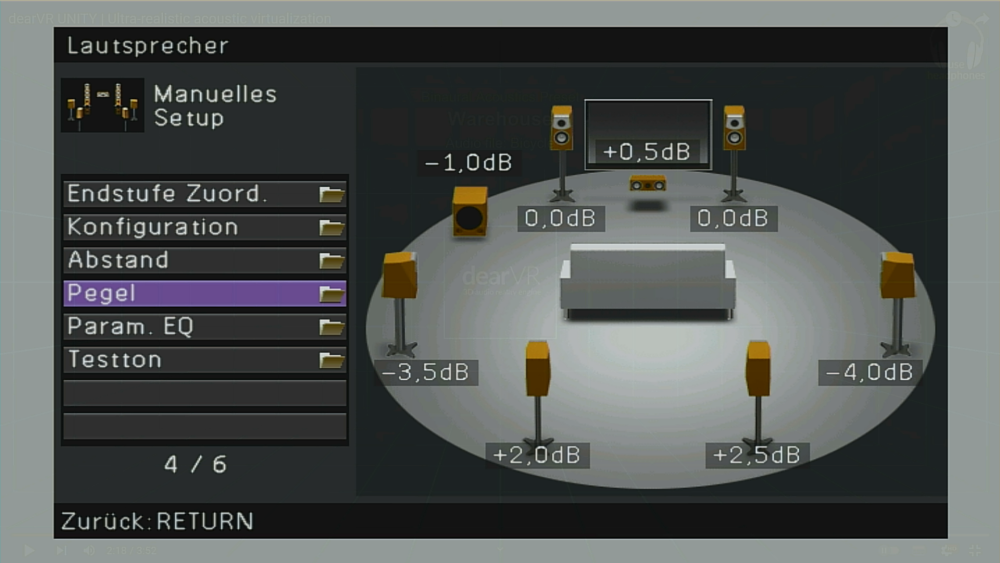

Hey there! As the lucky owner of a 7.2 surround setup, I was wondering how these "VR stereo tweaks" would behave on it. Would they just produce stereo sound on two speakers, or would they also drive the extra back/surround channels?

My home setup consists of a Yamaha RX-V 773 7.2+2.0 (2 zones) A/V receiver (AVR), a Jamo S626 HCS3 set (FL/FR/C/BL/BR), a pair of JBL Control One (SL/SR), and two subwoofers: a front-firing Teufel S-6000SW in the room and a down-firing T-4000 underneath my couch. The two screenshots from my AVR's calibration program show the speaker distances and levels detected during the automated calibration (some levels were fine-tuned manually afterwards).

Here's what I observed. I watched the dearVR promo videos first; these were mastered for binaural listening through headphones. Since I'm pretty sure they sound brilliant that way, I skipped the headphones and went straight to my room setup.

First, I used a "straight" 1:1 speaker mapping, i.e. the stereo signal is sent only to the FL and FR front speakers, with the AVR's frequency split to the subwoofers INACTIVE. Result: L/R panning was clearly noticeable, while F/B (front/back) panning was recognizable but sounded a bit flat (surprise...) on only two speakers. In other words, this is exactly why one should wear headphones for binaural playback...

Next was a preset on my AVR called "7.1 stereo", which outputs the left stereo channel on all left speakers (FL/SL/BL) and the right stereo channel on all right speakers (FR/SR/BR), feeds a 50/50 mix to the center (C), probably with a weighted or even calculated volume depending on the L/R intensities, and splits off the low frequencies to the subwoofers, which are set to take over at around 80 to 100 Hz and below.
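The "7.1 stereo" preset as described above can be sketched as a simple channel mapping. This is a minimal illustration under loudly stated assumptions, not Yamaha's actual processing: the center gets a plain 0.5*(L+R) mix, all left (and all right) speakers receive identical copies, and bass management is approximated by a first-order low-pass/high-pass split at a hypothetical 80 Hz crossover. The function names and the "SW" (subwoofer) key are made up for this example.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """First-order IIR low-pass (a crude stand-in for a real crossover)."""
    dt = 1.0 / sample_rate
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def one_pole_highpass(samples, cutoff_hz, sample_rate):
    """Complementary high-pass: whatever the low-pass removed."""
    low = one_pole_lowpass(samples, cutoff_hz, sample_rate)
    return [x - lo for x, lo in zip(samples, low)]


def stereo_to_7_1(left, right, sample_rate=48000, crossover_hz=80.0):
    """Spread a stereo pair over a 7.1 layout, 'all left / all right' style.

    Assumption: identical copies go to FL/SL/BL (and FR/SR/BR); any extra
    processing the real AVR may apply is not modeled here.
    """
    center = [0.5 * (lv + rv) for lv, rv in zip(left, right)]
    hi_l = one_pole_highpass(left, crossover_hz, sample_rate)
    hi_r = one_pole_highpass(right, crossover_hz, sample_rate)
    return {
        "FL": hi_l, "SL": list(hi_l), "BL": list(hi_l),
        "FR": hi_r, "SR": list(hi_r), "BR": list(hi_r),
        "C": one_pole_highpass(center, crossover_hz, sample_rate),
        # Hypothetical simplification: both subwoofers share one
        # low-passed mono feed derived from the L/R sum.
        "SW": one_pole_lowpass(center, crossover_hz, sample_rate),
    }
```

A real AVR would likely use steeper crossover filters plus the per-speaker distance and level trims from calibration; the first-order split is only chosen here for brevity.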
Now that the "stereo plane" was greatly stretched in depth, the speakers behaved much like a pair of headphones! I can't tell whether the AVR does any extra processing, since I could only follow along with my ears and no measuring equipment, but the "back" portion really seemed to travel behind my head, resulting in a much more impressive F/B panning depth, just as I would expect from binaural playback on headphones. Without level meters or other measuring equipment, though, I can't tell whether the front, side and back speakers on each side receive the same signal (leaving the spatial "processing" entirely to my ears and brain) or whether the AVR "helps a bit" by calculating and differentiating the F/S/B volumes. At least, routing the YouTube audio to the AVR through a VoiceMeeter virtual audio device showed that only the FL and FR channels carried a signal when leaving my computer's HDMI port. So far I haven't used any extra decoding on my AVR, although it has a Dolby Pro Logic II decoder with various modes (Game, Movie, etc.).

Since the "output format" section in the dearVR plugin demo suggests that you're not limited to binaural output, I think digital surround codecs might still be an option for non-headphone, in-room use when the hardware provides more than two channels/speakers.

On to Master Mi's demo videos: I repeated the same comparison with the "straight" and "7.1 stereo" output modes and ended up with "7.1 stereo" as the more "realistic" one. Output to the AVR was still limited to the FL and FR signals, so both the "2-channel surround editor" (left portion of the screen) and the "Independence FX plugin" (right portion of the screen) were operating on unencoded stereo output.
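The VoiceMeeter check above boils down to asking which channels of a multichannel stream actually carry signal. A minimal sketch, assuming the capture has been saved as interleaved little-endian 16-bit PCM and that the 8 channels follow the common WAVE_FORMAT_EXTENSIBLE 7.1 order (FL, FR, C, LFE, BL, BR, SL, SR); verify the order against your capture tool. `channel_rms`, `active_channels` and the -60 dBFS threshold are hypothetical choices for illustration.

```python
import math
import struct

# Assumed channel order for an 8-channel (7.1) capture; check your tool.
CHANNELS_7_1 = ["FL", "FR", "C", "LFE", "BL", "BR", "SL", "SR"]


def channel_rms(pcm16, n_channels):
    """Per-channel RMS of interleaved little-endian 16-bit PCM, scaled to 0..1."""
    frame_count = len(pcm16) // (2 * n_channels)
    total = frame_count * n_channels  # ignore any trailing partial frame
    samples = struct.unpack(f"<{total}h", pcm16[: total * 2])
    levels = []
    for ch in range(n_channels):
        chan = samples[ch::n_channels]
        power = sum(s * s for s in chan) / max(len(chan), 1)
        levels.append(math.sqrt(power) / 32768.0)
    return levels


def active_channels(pcm16, names=CHANNELS_7_1, threshold_db=-60.0):
    """Names of the channels whose RMS level exceeds threshold_db (dBFS)."""
    active = []
    for name, level in zip(names, channel_rms(pcm16, len(names))):
        if level > 0 and 20 * math.log10(level) > threshold_db:
            active.append(name)
    return active
```

If only `["FL", "FR"]` comes back, the source is plain stereo and any activity on the other speakers is created by the AVR itself.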
While 2CSE just seems to work with some sort of position- and distance-dependent loudness, I'm convinced that Independence relies heavily on convolution reverb to fake a 3D room impression, which feels WAY MORE REALISTIC AND NATURAL: it's as if you weren't moving a flat 2D plane through space (which is what 2CSE sounds like), but catching all the changing reverb details of a point source in 3D space instead. If I had to choose, I'd definitely go with Independence FX.

Regarding the deviation in L/R levels that Master Mi was wondering about, my best guess is that, since all the simulation and calculation boils down to psychoacoustics, there are beating and cancellation effects in the reverberated signals that can cause spikes or drops on a particular channel at a given moment.

For the "Goldfinger" THPS remix, Master Mi hinted that there should be some "encoded" signal for the "back" speakers in the stereo stream, but there wasn't. I didn't notice differentiated signals from the F/S/B speakers in 7.1 stereo mode, and the S/B speakers produced no sound at all, neither in 1:1 passthrough mode nor with an active AVR surround decoding preset. IMHO, there are two possible reasons for this: either the DAW never produced a "real" 5.1/7.1/whatever signal (or a 2.0 signal with encoded 5.1/etc. information), or this information simply fell victim to conversion and recompression when the video was uploaded to YouTube. In other words, listening to this track on a standard stereo setup, a surround system or just headphones would make no technical difference. In terms of psychoacoustic effect and spatial impression, however, the clear winner would be headphones, directly followed by a surround system that can "upmix" to 7.1 by replicating the FL+FR channels to their respective S+B pairs. Still, the signal would just be plain stereo with some (unencoded) psychoacoustic voodoo magic inside.
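The guess about spikes and drops can be illustrated with the simplest possible convolution reverb: an impulse response made of the direct sound plus a single, equally loud reflection. This is a toy model, not what Independence FX actually does (real convolution reverbs use measured impulse responses many thousands of samples long, usually convolved via FFT); the 1 ms delay (48 samples at 48 kHz) and the function names are assumptions. A 500 Hz tone, whose half period equals the delay, cancels almost completely, while a 1 kHz tone, whose full period equals the delay, doubles in level.

```python
import math


def convolve(signal, impulse_response):
    """Direct-form full linear convolution: y[n] = sum_k h[k] * x[n - k]."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out


def steady_state_peak(freq_hz, delay_samples, sample_rate=48000, n=4800):
    """Peak level of a sine after adding one equally loud reflection."""
    x = [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]
    ir = [1.0] + [0.0] * (delay_samples - 1) + [1.0]  # direct sound + echo
    y = convolve(x, ir)
    # Keep only the region where both direct sound and echo are present.
    core = y[delay_samples:n]
    return max(abs(v) for v in core)


print(steady_state_peak(500, 48))   # near 0: reflection arrives in anti-phase
print(steady_state_peak(1000, 48))  # near 2: reflection arrives in phase
```

Because the cancelling frequencies depend on the delay, slightly different reflection patterns on the left and right channels can make the L/R levels drift apart from moment to moment.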
Listening to the track in "7.1 stereo" mode at least "felt" like being in the center of it, even without "real" surround sound. Instruments could be clearly distinguished and located, and I indeed had a hard time figuring out that the trumpet was equally present on my FL/SL/BL speakers, even though it seemed to sound from somewhere between the FL and SL speakers. Aside from that, recognizing Pachelbel's pattern of descending fifths near the end of the theme inevitably put a smile on my hobby organist's face!

In summary, I think mastering the way you did in the THPS track works pretty well and can only enhance the listening experience (though I had no "flat" version to compare against). The good news is that listeners don't need any special hardware, since it's all about tricking the brain with psychoacoustic modeling and nothing else; the bad news is that this method can't provide "real", separated surround channels the way encoded stereo formats do. Even with just two speakers and no upmixing, the result still sounds impressively "live and vivid", but I'd still recommend headphones for the full listening experience.

Mi, if you could provide the raw stereo export from your DAW as a WAV or FLAC file, it would help to find out whether encoded surround information was dropped during the YouTube upload and its subsequent conversion. Given that the output still sounds convincing, I suspect this wasn't the case, but this way we could find out for sure.
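Once a raw WAV export is available, a quick first check is to compare the energy of the difference signal (L-R) with that of the sum (L+R). In a passive Dolby Surround / Pro Logic matrix, the surround feed is carried in the L-R difference, so a matrix-encoded mix tends to show comparatively strong side energy. This is only a hint, not proof: wide panning and reverb raise side energy as well. The sketch assumes a 16-bit stereo PCM WAV (a FLAC file would need converting first), and the function names are hypothetical.

```python
import math
import struct
import wave


def read_stereo_pcm16(path):
    """Return (left, right) sample lists from a 16-bit stereo PCM WAV file."""
    with wave.open(path, "rb") as wf:
        if wf.getnchannels() != 2 or wf.getsampwidth() != 2:
            raise ValueError("expected a 16-bit stereo PCM WAV file")
        data = wf.readframes(wf.getnframes())
    samples = struct.unpack(f"<{len(data) // 2}h", data)
    return list(samples[0::2]), list(samples[1::2])


def side_to_mid_db(left, right):
    """Energy of (L-R)/2 relative to (L+R)/2, in dB."""
    mid = sum(((a + b) / 2) ** 2 for a, b in zip(left, right))
    side = sum(((a - b) / 2) ** 2 for a, b in zip(left, right))
    if side == 0:
        return float("-inf")  # identical channels: no side content at all
    if mid == 0:
        return float("inf")   # purely anti-phase content
    return 10 * math.log10(side / mid)
```

Usage, with a hypothetical file name: `side_to_mid_db(*read_stereo_pcm16("goldfinger_export.wav"))`. Comparing the result for the DAW export with that of the YouTube version would show whether side content was lost in transcoding.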