Posts posted by zircon

-

I'm now taking a class called "Computer & Digital Applications II" here at Drexel, where all the work will be done in Pro Tools LE (with an Mbox). Since there's a LOT of work to be done, and the computers here aren't all that great (Mac G4s), I'm going to bite the bullet and buy an Mbox 2 with Pro Tools so I can do the work on my own computers. This will also kill two birds with one stone, since I've been looking for a portable interface for my laptop anyway, and it's a pretty powerful machine. All I would need now is a decent mic and I'd have a great portable recording setup.

Is there anything I should know before I do this? Any particular bundle or set of plugins I should have, outstanding system requirements, something like that?

-

I've been involved with a bunch of collabs. There are a few ways you can do it.

1. If you use the same sequencer, share your free plugins and samples, then send the project file back and forth. Boom, done. That's how my collabs with tefnek go.

2. You all use MIDI for the arrangement until one person (or two) assigns samples and synths to the MIDI notes.

3. One person writes the MIDI, the other person does the production.

4. You send audio files back and forth (WAV) and one master arranger puts it all together.

-

EMU 0404 is fantastic, I recommend it to everyone in that price range. It works great.

-

Yes, I have the MGS2 soundtrack. I suppose I should have said "too much reliance on direct sampling".

-

Direct rip mixed with default Reason drumloop.. uhh, wow? Did I miss something here?

-

I'm not 100% sure, suzume, but I believe the original Korg Legacy is a softsynth based on the well-known KORG MS-20, which, as I'm sure you know, is a monophonic analog synth from the '70s. It's also more expensive, but that's because you get the controller, right?

Original Korg Legacy was MS-20 (w/ controller), Wavestation (digital synth), and Poly Six (another analog synth). As I said. $500 tho!

-

What's the difference between the Korg Legacy Collection and the Digital Edition (besides the MS-20 controller)?

Korg Legacy Collection = MS-20, Poly Six, Wavestation 1.

Korg Legacy Collection: Digital Edition = M1, Wavestation 1.5

Korg Legacy Collection: Analog Edition = MS-20, Poly Six

They discontinued the original KLC and now offer KLC:DE and KLC:AE at reduced prices.

-

Bugger me, only $150?! That's CRAZY! I was looking at the specs on the site - 900 PCM waveforms! I mean that's a LOT of waveforms...not to mention you get Wavestation and a ton of effects thrown in free.

Yeah. It's nuts. Not only do you get ALL the waveforms and patches of the M1 and Wavestation, but EVERY SINGLE expansion card (all like 25+ of them) for both. There are probably a good 3k patches at least.

-

Russell Cox is a pretty experienced composer/arranger with a big music background. In addition he has some very high quality samples; that helps with realism.

Jeremy Soule is an award-winning, world-famous composer. So.. he's got a TON of things that he does that you could not possibly summarize in one post.

-

The Korg M1 is simply brilliant. I got the Korg Legacy Digital Edition (which includes the M1 and Wavestation, perfectly reproduced, and it's only $150), and browsing through the patches, it just SCREAMS "80s". I listen to tons of smooth jazz from the last decade or two and I CONSTANTLY hear M1 sounds. It's such a great synth. I can't recommend it enough.

-

The sounds themselves are very realistic: 24-bit at 48 kHz - so this already rules out a lot of competitors (and leaves only Siedlaczek String Essentials and Vienna Symphonic Library). Another thing that's different from the competitors is that the VSTi doesn't rely on convolution or reverb, but uses a technique similar to FXpansion BFD's "rooms". You have two tweaking knobs called "Body" and "Room" that let you add extra realism to the sound of the samples.

Well, keep in mind QLSO Platinum is recorded that way as well. VSL is too, I'm pretty sure. But the bit depth and sample rate really have no impact on the actual quality of the orchestral samples. There are tons of other, more important factors.

-

Reaktor 4 and 5 do not use dongles. They use challenge/response which is no hassle at all.

Also, in my experience Image-Line has good customer service. Their forums are very helpful. But why would you have FL Studio 4? Get 6.

-

I wrote a guide to terminology on this kind of thing but I guess it's not here any more. Can't blame ya. However, Googling for 'VST definition music' (w/o the ') came up with relevant hits. Might want to try a little harder on the searching next time. I'll explain anyway.

VST is a plugin format developed by Steinberg. It stands for Virtual Studio Technology. There are VST effects (equalizers, compressors, reverbs, distortion units, overdrives, delays, anything else) as well as VST instruments (VSTis - synths, samplers, drum machines, what-have-you). It's a near-universal technology, meaning that if your host is VST compatible, it is pretty much guaranteed to load 99% of the VSTs out there. It's also capable of being cross-platform: Cubase for Mac runs VSTs just as Cubase for Windows does (this might require some slight alterations, but just about all VSTs are compatible that way). It's really an amazing technology.

MOD is a tracker format. Trackers are a kind of music sequencing software, though many consider them obsolete, and they never really gained popularity with the majority of audio professionals. Rather than a piano roll or score notation, trackers used an odd method of input, involving the typing keyboard and an almost mathematical method of defining note start times, pitches, and durations. The sounds themselves came from small samples (e.g. WAVs) loaded into the tracker. The resulting MOD (or XM, S3M, etc.) files bundled all of the note data together with the samples themselves. The MOD player would then basically assemble the piece on the fly.
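To make the "almost mathematical" input a bit more concrete, here's a minimal Python sketch of a one-channel tracker pattern. It assumes ProTracker-style timing, where each tick lasts 2.5/BPM seconds and each row lasts `speed` ticks (the usual defaults being speed 6 at 125 BPM). The `Cell` class and `row_start_seconds` helper are hypothetical names for illustration, not any real tracker's file format.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Cell:
    """One cell of a tracker pattern: a note, the sample that plays it, an optional effect."""
    note: Optional[str]   # e.g. "C-4", or None for an empty row
    sample: int = 0       # index into the samples bundled with the module
    effect: str = ""      # hypothetical text form of an effect command

def row_start_seconds(row: int, speed: int = 6, bpm: int = 125) -> float:
    """Start time of a pattern row, assuming ProTracker-style timing:
    one tick lasts 2.5 / bpm seconds and one row lasts `speed` ticks."""
    tick_seconds = 2.5 / bpm
    return row * speed * tick_seconds

# Rows are time steps read top to bottom; empty rows are just silence.
pattern = [
    Cell("C-4", sample=1),
    Cell(None),
    Cell("E-4", sample=1),
    Cell(None),
    Cell("G-4", sample=1),
]

# "Play" the pattern by working out when each note starts.
events = [(row_start_seconds(i), cell.note, cell.sample)
          for i, cell in enumerate(pattern) if cell.note is not None]
for t, note, sample in events:
    print(f"{t:.2f}s  {note}  sample {sample}")
```

The player never stores note times directly; it derives them from the row position plus the speed/tempo settings, which is what makes the format feel so numeric compared to a piano roll.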

-

That may be true, but keep in mind (as I stated numerous times throughout the tutorial) that my advice is generalized and heavily dependent on style. Nonetheless, libraries like Virtual Guitarist 2, Prominy LPC, and Distorted are all extremely realistic.

-

Hey, could you go ahead and link to the production tutorial I wrote on this very forum? It has lots of useful info, imo.

-

As I understand it, you need to match the impedance with the rest of your signal processing gear.

-

I think only one guild on my server (Horde, anyway) has killed Huhuran. Our raiding guilds are not in good shape atm, and the gate only opened recently.

-

I've done the first two fights of AQ40 (NOT Sartura). Very good items overall from there, no reason not to do it imo.

-

zircon, AQ gear is NOT, I repeat NOT, considered tier 3...not by a long shot, especially considering the sets are 5 piece and not 8. As an end-game raider, I can say many people have complained that Blizzard dropped the ball on this instance - sure it has its cool parts, but the risk vs reward is too great for the minimal upgrades, which many (including myself) consider more of a sidegrade. Sure it has its standout items, but that's something seen in every instance.

Actually, tier 1 -> tier 2 could barely be considered an upgrade if you want to look at it that way. Many Shamans prefer Earthfury over Ten Storms for PvE, for example. I see rogues using their Nightslayer set rather than Bloodfang sometimes as well, and I myself see little reason to spend all my points on Transcendence when I can get UBER upgrades like Pure Elementium Band, Rejuvenating Gem, etc. In comparison, however, the sets in AQ are VERY good. Neither Prophecy nor Transcendence even holds a candle to Oracle, in my opinion.

-

Aside from billing you, Blizzard appears to be incapable of doing anything on time.

-

Tier 3 is Ahn'Qiraj.

-

ReMixing Tips Part 5: Production Values

Ok. This one is going to be a bit long and a little more abstract than my other tutorials. Bear with me as I think there's some useful information here.

First of all, I'd like to present my definition of "production values". To me, production values are the technical aspects of a song - the implementation of it, if you will. A song is really only notes on paper, or a melody in your head, until it is actually executed in some way. You can have a brilliant composition entered into your sequencer, but if you don't pay attention to the production values (things like choosing the right samples, making good recordings of the different parts, choosing proper effects, balancing volume levels, and so on), your final product will suffer as a result. When it comes to ReMixes, chances are you will mainly be dealing with sounds generated on your computer rather than a lot of live recordings, but I'll try to cover both of these areas.

What I intend to go through in this tutorial is how best to approach the production of a song in order to make it sound as good as it possibly can. Keep in mind, once again, that just about everything you are about to read is my own personal opinion on the topic. I've developed a methodology based primarily on lots of practice, so what you are getting is a personalized approach. Nonetheless, I've also learned a lot by reading interviews, listening to well-produced music, taking classes on the topic, reading articles, browsing forums like this one, and talking with more experienced musicians. So, I didn't completely make everything up off the top of my head.

Anyway, let's begin.

Determining the Style

The style of your mix is the most important thing to keep in mind when you are considering how to produce it. Now, before you say "But I don't want to lock myself in to just one style", the song you have completed (or are in the process of writing) is likely easier to categorize than you think. Let's set aside terms like "trance", "hip hop", "metal", or "jazz" for a moment. I am assuming that if your mix falls squarely into one of those categories, then you already have a pretty good idea of the general sound you are going for.

Think about HOW you are constructing your song. Is it going to be centered around a vocal line, accompanied by supporting instrumental parts? Is it going to be fast-paced, with a driving rhythm? Is it going to be made mostly with synths? Is it going to be a solo instrumental piece? Try to narrow down your idea of what the song should have. For the sake of analysis, let's take a look at my ReMix "Calamitous Judgment". I basically knew that I wanted to integrate orchestral instruments (because I think they can add a dramatic flavor to the song), as well as a strong drum groove and synthesizers. You may not have any specifics beyond these kinds of things - for example, what KIND of orchestral instruments, or what KIND of drums you're going to be using for the groove - but having this general picture is very important to the process! If you honestly have no idea what kind of ReMix you are doing, not even the slightest clue, then spend time in your sequencer switching between different sounds and narrowing down the style from there.

This is vital in the production process because it impacts the rest of your decisions from here on out. People tend to ask me very general questions such as, "How can I make my drums better?" and I really have trouble responding to that, because every style is different. If you are doing an acoustic rock song, "making your drums better" might involve finding a set of realistic drum samples and writing a very humanized sequence with lots of fills and variations in the patterns. If you're doing an energetic breakbeat song, then your biggest problem is probably not the pattern of the drums, but how the samples are processed. Two very different outlooks on the same problem.. all based on what style we're dealing with.

Live vs. Sequenced (MIDI)

Another important production decision is whether you are going to use recordings of musicians playing live, sequenced MIDI sounds, or a combination of the two. As I said earlier, more often than not, ReMixes tend to use parts that are MIDI sequences played through software sounds. However, don't discount the value of having a live instrumental or vocal part. Here are my general tips on the subject.

* Drum parts suffer the least from being sampled/sequenced. From free, high-quality sample sets like NSKit 7 to relatively low-cost libraries such as BFD or Drums from Hell, the overall quality of drum samples is simply stunning. If you have a good rhythmic sense, you can listen to music with grooves that you like, and reproduce them pretty easily in a sequencer.

* Vocals are on the opposite end of the spectrum. Sampled vocals usually come across as cheesy and out of place, outside of "ahh" and "ooh" type choir sounds. You CAN use vocal clips tastefully, but if you want a full sung or spoken line, you're better off writing it yourself and finding someone to perform it.

* Orchestral sounds are difficult to sequence realistically, and high-quality sampled ones are sometimes hard to find, but because it is unlikely that you have a full orchestra at your disposal (for a host of reasons), you're better off sticking to samples and learning how to use them well. The exception is..

* Solo orchestral instruments. If you want an expressive violin, cello, or horn line played by a single musician, it is going to be really, really hard to do well with samples. Even the best commercial samples don't sound very good solo. Thus, my recommendation would be to either reconsider whether you want a solo orchestral instrument part at all, or look around on these forums for a performer who can help you out. They're more common than you would think.

* A piano line can go either way. Many ReMixes on this site use sampled piano, including some of the piano-only ReMixes, and some of them are entirely sequenced by mouse (rather than played in on a MIDI keyboard). I would lean towards using a sampled piano rather than going to the bother of recording a real one, as chances are the samples would sound better. However, if you only have free samples, and/or sequencing piano is not really your thing, a better option might be to write out a MIDI file or sheet music and give it to a performer with either a good recording setup or a good MIDI recording setup.

* Electric guitars can also go either way, but sampled ones sound unrealistic more often than not. If you're doing rhythm guitar stuff, or sustained chords (particularly power chords), you can get away with using samples. Lead guitars can be sampled too, but if you really want to do a rock or metal style remix, NOT a "synth metal" one, consider a live performer. If you're going for a more synthy or retro sound, on the other hand, by all means use all the electric guitar samples you want.

* Acoustic guitar, surprisingly, can sound pretty good - even with free samples. Once again, if it's a mix centered around acoustic guitar, you can always find one of many guitarists here who regularly play acoustics, but for most purposes, using samples will suffice.

While these aren't all the instruments available, this should give you a good starting point for how to approach sampled parts vs non-sampled ones.

Balancing Multiple Parts

Aside from solo pieces, you're probably going to have at least three or four different sounds of some kind going on in your mix. Part of the production process is balancing these parts in an intelligent manner. This involves managing the volume, panning, and effects of the individual parts. Please note this is NOT really mastering in the traditional sense, though if you do it right, people will likely say your song sounds well-mastered.

Here's where the choice of style comes in. If you're doing an upbeat dance song, you'll want an emphasis on the drums, with even more emphasis on the kick and snare. You can do this by increasing their volume, adding compression and distortion, and EQing up resonant frequencies. For other styles, the drums don't need to play such a big role. For example, for a soothing ambient mix, your drums can just sort of be a "wash" in the texture; add reverb/delay, EQ out any piercing frequencies, reduce their volume, remove the strong bass frequencies of the kick, etc. The exact choices are up to you and without hearing any specific mix, it's impossible for me to give much more detailed advice than that. I can, however, give more generalized advice..

* Whenever the melody is playing, unless you are specifically working in a very ambient style, it should be clear and present. Usually whatever instrument I have playing a lead is more present in the mid to upper frequencies. If it's playing too many low notes, or it's too much of a bassy instrument, it won't stand out. EQ can remedy this. Also, adding a bit of reverb and delay, along with some VERY light distortion, to a synth or lead guitar can make it cut through the mix much better.

* Generally, it's not a bad idea to EQ down the low frequencies of harmony instruments, such as synth pads, strings, choirs, and rhythm guitars. This would be around 20-250 Hz. You should distinctly hear the bass instrument (be it a guitar, synth, whatever) as well as the kick if you have a drumline going. If they're "lost in the mix" then chances are your harmony instruments are muddying things up, and you need to either have them play an octave or more higher, or EQ their low end down. However, don't OVERDO this. You shouldn't be rolling off every instrument, and, especially for synth pads, the character of them can be killed if you EQ their low end down too much. If the low end is muddy, you might also consider simply reducing the volume of the offending instruments rather than EQing. Try it both ways.

* Don't overdo it with sustained instruments, or sustained instruments playing chords. I would say that one of the key problems I hear with muddy mixes is too many sustained notes going on. It's easy to lay down a bunch of different synth pads or string soundfonts holding long chords, and they might sound great by themselves, but when combined with everything else, it can be hard to clean up the resulting mud. My suggestion is to have no more than one or two sustained pad instruments going at once (unless you're really adept at processing + EQing). Go easy on the reverb/delay on those instruments as well.

* Reverb and delay on drums is usually a bad idea. I know this is a big generalization, but again, a big problem that I constantly hear is too much reverb on drum parts. Delay, 99% of the time, just makes the drums sound very annoying. If you absolutely must have some reverb on your snare, for example, start with a wet/dry ratio of 0:100 (fully dry) and then SLOWLY inch it up. If you're on headphones, mix it a little drier than you would prefer, because most people tend to mix in too much reverb on headphones.

* Don't be afraid to use volume automation to make parts fit better. You might have a string section playing some opening chords before the main melody of your mix comes in, but even though the strings were the focus before, they might be too loud when mixed with the melody. Use your sequencer's automation function to drop the volume of the strings when the melody comes in. Most listeners will focus on the melody immediately anyway, so it won't seem unrealistic or unnatural. If it does, try adjusting the volume BEFORE the melody comes in so that it's a gradual change (a curve).

* For a basic drum groove (with bass drum, snare, hats), usually your bass drum should be loudest, followed by the snare, followed by the hats. Shakers and tambourines should be about the same volume as the hats. Toms can be about the same volume as the snare, and ethnic percussion like congas and bongos can be a little softer than the snare. Overly loud hi-hats or extra percussive parts can very easily ruin an otherwise nicely made song. When in doubt, stick to the simple kick+snare rhythm.

* When panning, it's usually not a good idea to have anything too far to one side unless it's counterbalanced by a similar sound on the other side. This is a common technique in recording guitars, for example; do one take in mono, pan it "hard" (full) right, do another one that's as close as possible to the first in mono, pan it hard left. The same technique can apply to other sampled instruments also if you want to get some stereo width. This works especially well for synthesizers if you're going for a "fat" sound for genres like trance.
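The low-end cut for harmony instruments can be sketched in a few lines of Python. This is a minimal illustration assuming a plain first-order (6 dB/octave) high-pass filter; the function name is hypothetical, and a real EQ plugin would usually offer steeper slopes or a low shelf. Set near the top of the 20-250 Hz range, it gently rolls off the lows rather than removing them outright, which fits the "don't OVERDO this" advice.

```python
import math

def one_pole_highpass(samples, cutoff_hz, sample_rate=44100):
    """First-order (6 dB/octave) high-pass filter: content below cutoff_hz is attenuated."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = []
    prev_x = 0.0
    prev_y = 0.0
    for x in samples:
        # Output follows changes in the input but lets steady (low-frequency) content leak away.
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out

# A constant (DC) signal dies away, while a fast-alternating signal passes nearly untouched.
dc = one_pole_highpass([1.0] * 44100, cutoff_hz=250)
buzz = one_pole_highpass([1.0 if n % 2 == 0 else -1.0 for n in range(44100)], cutoff_hz=250)
```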
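The "gradual change (a curve)" for volume automation can be sketched as a gain envelope. This Python sketch assumes the ramp is interpolated in dB, which produces a curve in linear amplitude; the function names, the 6 dB drop, and the half-second ramp are hypothetical example values, not settings from any particular sequencer.

```python
def db_to_gain(db):
    """Convert decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)

def automation_ramp_db(start_db, end_db, ramp_samples, total_samples):
    """Volume envelope that glides from start_db to end_db over ramp_samples,
    then holds end_db. Interpolating in dB gives a curve in linear amplitude."""
    envelope = []
    for n in range(total_samples):
        t = min(n / ramp_samples, 1.0)   # 0.0 at the start, 1.0 once the ramp is done
        envelope.append(db_to_gain(start_db + (end_db - start_db) * t))
    return envelope

def apply_envelope(samples, envelope):
    """Multiply each sample by the matching envelope value."""
    return [s * g for s, g in zip(samples, envelope)]

# Hypothetical values: pull the strings down 6 dB over half a second as the melody enters.
sample_rate = 44100
strings = [0.5] * sample_rate            # placeholder for one second of string audio
env = automation_ramp_db(0.0, -6.0, sample_rate // 2, len(strings))
ducked = apply_envelope(strings, env)
```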
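The double-tracking trick can be sketched as two mono takes panned hard left and hard right. The post doesn't say which pan law to use, so this Python sketch assumes a sine/cosine constant-power law, a common choice; hosts differ, and the function names and placeholder samples are hypothetical.

```python
import math

def constant_power_pan(pan):
    """Left/right gains for pan in [-1.0 (hard left), +1.0 (hard right)],
    using a sine/cosine constant-power pan law (an assumption; hosts differ)."""
    theta = (pan + 1.0) * math.pi / 4   # maps -1..+1 onto 0..pi/2
    return math.cos(theta), math.sin(theta)

def double_track(take_a, take_b):
    """Hard-pan one mono take left and a second, near-identical take right."""
    left_a, right_a = constant_power_pan(-1.0)
    left_b, right_b = constant_power_pan(+1.0)
    left = [a * left_a + b * left_b for a, b in zip(take_a, take_b)]
    right = [a * right_a + b * right_b for a, b in zip(take_a, take_b)]
    return left, right

take_1 = [0.2, 0.5, -0.3]     # placeholder samples for two guitar takes
take_2 = [0.25, 0.45, -0.35]
left, right = double_track(take_1, take_2)
```

Because the two takes differ slightly, each speaker carries a slightly different performance, which is where the perceived width comes from; at center, this law gives each side about 0.707 so the total power stays constant.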

Equalization

This is a broad topic, for sure. But the EQ is one of the most important tools in your arsenal when it comes to shaping sounds. My strongest advice here would be to NOT be afraid to make big changes to EQ settings (though there is an exception, which I'll explain in a minute). I routinely boost or cut bands by 10 dB or more to get the exact sound I want. This is especially true if you're mostly working with synth sounds. Most common synthesizers use subtractive synthesis, and most patches within those synths use lowpass filters, which leave the low frequencies intact. If you're like me and you love sounds like the 303, you'll be dropping bass frequencies and boosting mids-highs all the time. Carve 'em up!
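To put "10 dB or more" in perspective, here's a small Python sketch converting EQ boosts and cuts into amplitude multipliers, using the standard 20·log10 convention for amplitude. The helper names are hypothetical.

```python
import math

def db_to_gain(db):
    """Decibel change -> linear amplitude multiplier (20 * log10 convention)."""
    return 10 ** (db / 20)

def gain_to_db(gain):
    """Linear amplitude multiplier -> decibel change."""
    return 20 * math.log10(gain)

for change_db in (3, 6, 10, -10):
    print(f"{change_db:+d} dB -> x{db_to_gain(change_db):.2f} amplitude")
```

So a 10 dB boost multiplies that band's amplitude by roughly 3.16, and a 10 dB cut leaves about a third of it, which is why these count as big moves.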

The exception to this policy is when you're working with vocals, instruments exposed for a solo, and orchestral sounds. With these, you have to be more careful. The human voice has a naturally wide frequency range, which should generally be preserved. Making large EQ cuts or boosts can make the voice sound very unnatural. The same applies to recordings or samples for solo parts. Everyone knows what a piano sounds like, and if you have it exposed in the mix but heavily EQed, it will sound strange. Keep the EQing to a minimum in this context. Orchestral sounds can be like this as well. If you're doing a mix entirely with orchestral sounds, or if you have orchestral sounds exposed at some point in your track, it will hurt their character if you EQ them too much. Subtle adjustments are all you should need. Of course, if you're fitting them in with synths and other non-orchestral instruments you have more leeway when it comes to EQ as they are not the focal point.

Layering

Layering is an often overlooked but very powerful tool that you can use when producing a mix. If you're designing a groove-centric track but you just can't seem to get the right drum sound with your samples, don't give up. Load multiple samples of the same thing - three kick drums, two snares, two toms - send them through the same effects, and mess with their volumes to find a nice balance between them. You might have a very deep bass drum hit that has lots of power, but no presence anywhere else. So, you can then load a very snappy kick with higher frequencies, layer them, and now you have a kick that might fit your mix a lot better.
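Under the hood, layering is just summing the samples with individual gains before they hit the shared effects. A minimal Python sketch, assuming mono one-shots that all start at the same moment; the function name and the sample values are made-up placeholders, not real kick data.

```python
def layer(samples_with_gains):
    """Sum several one-shot samples (e.g. kick layers) with individual gains.
    Shorter samples are padded with silence so every layer starts together;
    the summed result is what would then go through the shared effects."""
    length = max(len(s) for s, _ in samples_with_gains)
    mixed = [0.0] * length
    for samples, gain in samples_with_gains:
        for i, x in enumerate(samples):
            mixed[i] += x * gain
    return mixed

deep_kick = [0.9, 0.7, 0.5, 0.3, 0.1]   # placeholder data, not real samples
snappy_kick = [0.6, -0.4, 0.1]
combined = layer([(deep_kick, 0.8), (snappy_kick, 0.5)])
```

Note that the first summed value lands above 1.0 (full scale) here, which is exactly why the layer volumes need rebalancing once they're stacked.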

This doesn't just apply to drums, though. One technique that I use a lot applies to synth basses. Check out the following track and listen to the synth bass that comes in with the main melody.

www.zirconstudios.com/newsong.mp3

This bass sound is actually made up of two separate patches. One is the deep bass you hear at the very beginning of the song. The other is a filtered saw wave patch whose filter rises (or opens) at the beginning of each note, all the way up to the high frequencies. The saw patch has all the low end EQed out but the mid frequencies emphasized. When this patch is layered with the other bass patch, it creates a subtle but cool (in my opinion) effect that makes the bass sound more interesting overall.
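A rough Python sketch of the "opening filter" layer, assuming a naive sawtooth and a one-pole low-pass filter whose cutoff sweeps exponentially at each note-on. The 200 Hz to 8 kHz range and 150 ms sweep time are made-up example values, not the settings used in the track, and a real synth would use a steeper, resonant filter and an anti-aliased oscillator.

```python
import math

def cutoff_at(t, start_cutoff=200.0, end_cutoff=8000.0, sweep_time=0.15):
    """Filter cutoff (Hz) t seconds after note-on: an exponential rise that then holds."""
    progress = min(t / sweep_time, 1.0)
    return start_cutoff * (end_cutoff / start_cutoff) ** progress

def filtered_saw_note(freq, duration, sample_rate=44100):
    """One note of a naive sawtooth through a one-pole low-pass whose cutoff opens per note."""
    n_samples = int(duration * sample_rate)
    phase = 0.0
    y = 0.0
    out = []
    for n in range(n_samples):
        cutoff = cutoff_at(n / sample_rate)
        a = 1.0 - math.exp(-2 * math.pi * cutoff / sample_rate)  # one-pole smoothing factor
        saw = 2.0 * phase - 1.0      # naive sawtooth in [-1, 1); aliases at high pitches
        y += a * (saw - y)           # low-pass: move part of the way toward the input
        out.append(y)
        phase = (phase + freq / sample_rate) % 1.0
    return out

note = filtered_saw_note(55.0, 0.5)   # an A1 bass note, half a second long
```

In the layered patch described here, this layer would also get its low end EQed out before being summed with the deep bass.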

Pads are another thing that can benefit greatly from layering. Now, just to be clear, I did write earlier that you don't want too many pad sounds at the same time. To be more specific, what I meant was that you don't want too many distinct pads at the same time. One of my favorite tricks for making atmospheric pad patches is taking a choir, running it through a phaser, reducing the volume, and then layering it with a soft subtractive synth pad, reducing the volume of that as well. Because both sounds are turned down, together they end up at about the same volume as most single-layer pads, but the final sound is more interesting than either of the individual sounds would be.

There are a ton of things you can do with layering. These few ideas should get you started.

The Big Picture

Sometimes, working on individual parts or sections of a mix still might not reveal overall problems. Listen to your work from start to finish. Is the texture or soundscape always the same? If someone moves their Winamp playback cursor to random points in the song, will they be able to tell roughly which part of the song they're in? Some might argue this is more of an arrangement or composition issue than anything else, but I think production has a lot to do with it. Simply put, outside of a few cases, you don't want to have the same instruments throughout the mix. Even the most interesting drum grooves can become a bit grating if they play through a whole mix.

Listen to your mix with a really critical ear. There should be some sort of dynamic in what textures you're using. Let's say you have a 45-second introduction where you're bringing in all the different parts. Then, from :45 to 1:45 you're playing the melody with all of those parts. A common mistake is then letting all of those instruments keep going from 1:45 to the end of the song (say, at 3:30). Bad idea. You don't want to fatigue your listener; maybe at 1:45 you can drop out the bass, remove a few layers, and bring in some new instruments to play the melody/harmony. Then, you can drop out a few more at 2:15 before bringing back everything else (along with one or two new tracks) at 2:30, the finale. At 3:10 things die down and you have your outro. This works a lot better than simply leaving everything from :45 onward the same and adding new things on top of it.

Of course, depending on the style you are working in, you may not want a typical song structure. That's fine. However, the same rules still apply. You don't want a "wall of sound" assaulting the listener at all times. A major complaint that many people have about modern music in general is that it tends to be completely squashed and maximized to the point where there are no dynamics of any kind. While having "maxed out" volume levels is great at some points during a song, it's just a poor decision to have that going at ALL times. Don't fall into the same trap.

Mastering

Mastering is the very last thing in your process. Honestly, you should not have to do a lot here. Provided you have at least somewhat adhered to the stuff I've been talking about above, this part should just be the finishing touch - the "polish", if you will. There is no one right way to approach mastering, but generally, you want the overall volume of the mix to be at a level where the listener doesn't have to constantly adjust his or her volume control because the mix is too loud, too soft, or too variable in dynamics. You also don't want there to be any overly powerful or overly weak frequencies. Usually my mastering chain looks like this: compressor -> EQ -> limiter. Since you might not be entirely clear on what compression and limiting actually do, here's my explanation (quoted from another topic I wrote in).

-

A compressor works like this. You set a THRESHOLD volume level. When the waveform reaches this threshold volume level, it is reduced in volume. You control how MUCH it is reduced with the RATIO control. 1:1 means that for every dB of sound above the threshold, 1 dB of sound will go through - in other words, no effect. 2:1 means that for every 2 dB of sound above the threshold, only 1 dB will be heard. 5:1 means that for every 5 dB of sound above the threshold, 1 dB is output. And so on and so forth. Thus, if you only want to compress something a little bit, you wouldn't use more than 3:1 or 4:1 compression. Finally, the compressor has a GAIN knob to increase or decrease the overall volume of the sound AFTER the compression.

Now, a limiter is simply an extreme form of compression. It has a very, very high ratio (sometimes infinite). In other words, as soon as the sound hits the threshold, you could send 50 dB through it and it will only output the threshold level. You can effectively use a compressor as a limiter if you just set the ratio really high.
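The threshold/ratio arithmetic can be written as a tiny function. This Python sketch assumes a steady signal level (no attack, release, or knee) and uses hypothetical names; it just maps an input level in dB to an output level in dB, before the GAIN knob adds its fixed boost or cut.

```python
import math

def compress_level(level_db, threshold_db, ratio):
    """Static (steady-state) compressor curve: input level in dB -> output level in dB.
    Below the threshold the signal is untouched; above it, every `ratio` dB of
    input becomes 1 dB of output. ratio=math.inf turns it into a limiter."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio

threshold = -20.0
for ratio in (1.0, 2.0, 5.0, math.inf):
    out = compress_level(-10.0, threshold, ratio)
    print(f"{ratio}:1 -> a -10 dB input comes out at {out:.1f} dB")
```

With an infinite ratio, anything above the threshold comes out at exactly the threshold, which is the limiter behavior described above.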

Other controls to be aware of -

Attack: This is the time it takes for the compression to take effect. It's usually measured in ms. An attack of 15 ms is pretty quick, but some sound will still get through uncompressed. So, if you're compressing a snare heavily, you'll hear the *THWAP* right at the beginning, and then within 15 ms the sound will be compressed.

Release: The time it takes for the compression to stop after the sound has gone below the set THRESHOLD level. Usually between 200-800ms. Any longer and it's going to sound funny.

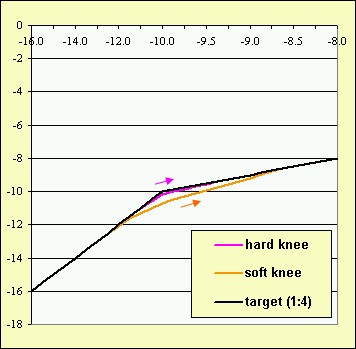

Knee: This isn't on every compressor, but this basically controls how "hard" the compression activates. When the sound hits the threshold, does it limit it very sharply right off the bat, or does it ease into it? Here's a diagram of what this looks like:

-10dB is the threshold, for that image.
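To show how attack and release times turn into behavior, here is a minimal Python sketch of a compressor's level detector. It assumes the common one-pole smoothing approach, where the time setting is how long the detector takes to cover about 63% of a sudden level change; compressors differ in exactly how they define these times, and the names are hypothetical. A full compressor would feed this envelope into its gain curve.

```python
import math

def smoothing_coeff(time_ms, sample_rate=44100):
    """Per-sample coefficient for a one-pole smoother that covers about 63%
    of a sudden level change in time_ms (one common way to define the time)."""
    return math.exp(-1.0 / (time_ms * 0.001 * sample_rate))

def envelope_follower(samples, attack_ms=15.0, release_ms=300.0, sample_rate=44100):
    """Level detector: rises at the attack rate, falls at the release rate."""
    att = smoothing_coeff(attack_ms, sample_rate)
    rel = smoothing_coeff(release_ms, sample_rate)
    env = 0.0
    out = []
    for x in samples:
        level = abs(x)
        coeff = att if level > env else rel   # rising -> attack, falling -> release
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out

sr = 44100
attack_samples = int(0.015 * sr)              # 15 ms worth of samples
rise = envelope_follower([1.0] * attack_samples, sample_rate=sr)
fall = envelope_follower([1.0] * sr + [0.0] * attack_samples, sample_rate=sr)
```

After 15 ms of a sudden loud signal the detector has only reached about 63% of it, which is why the start of a snare hit slips through; after the signal stops, the 300 ms release lets the level fall off much more slowly.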
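The knee can be sketched the same way. This Python example assumes a widely used quadratic soft-knee formula, not necessarily what any particular plugin does; `knee_db` is the width of the transition region centered on the threshold, and the -10 dB threshold matches the diagram. The function name is hypothetical.

```python
def soft_knee_level(level_db, threshold_db, ratio, knee_db):
    """Static compressor curve (dB in -> dB out) with a quadratic soft knee.
    knee_db = 0 reproduces the hard-knee curve."""
    over = level_db - threshold_db
    if knee_db > 0 and abs(over) <= knee_db / 2:
        # Inside the knee: ease gradually from 1:1 into the full ratio.
        return level_db + (1.0 / ratio - 1.0) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    if over <= 0:
        return level_db                  # below the knee: untouched
    return threshold_db + over / ratio   # above the knee: full compression

for level in (-20.0, -13.0, -10.0, -7.0, 0.0):
    hard = soft_knee_level(level, -10.0, 4.0, 0.0)
    soft = soft_knee_level(level, -10.0, 4.0, 6.0)
    print(f"in {level:6.1f} dB  hard {hard:7.2f}  soft {soft:7.2f}")
```

The two curves agree outside the knee region; inside it, the soft knee starts compressing a little before the threshold and reaches the full ratio a little after it.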

So what are practical uses of compression and limiting?

Let's say you have a recording of a guitar. Throughout the recording, you have quieter parts at -28 dB, and louder parts at -8 dB. That is a 20 dB dynamic range, which is pretty big. So, you set your compressor to a threshold of -16 dB, a ratio of 3:1, and gain of +10 dB.

So now, all the parts louder than -16 dB are being pulled down toward -16 dB. Your loudest peak before was -8 dB, which is 8 dB over the threshold, and the ratio is 3:1. 8/3 is about 2.7 dB, so your loudest peak after the compression will be about -13.3 dB. The quieter parts are still -28 dB, but now the louder parts are -13.3 dB instead of -8. That means that the dynamic range is now about 14.7 dB instead of 20.

But wait, didn't this REDUCE the overall loudness? AHA! That's where the GAIN comes in. We set the gain to +10 dB, meaning that the quietest parts are now -18 dB, and the loudest ones are about -3.3 dB. This is pretty loud in the grand scheme of things, but not uncommon for a commercial track. Now, think about it - if you had just pumped up the volume by 10 dB before, the peak level would have been +2 dB, which is clipping. If you had done that and then thrown a limiter on it, you'd still have a big dynamic range. So, compression is very useful.
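The guitar example's numbers can be checked with a few lines of Python. This sketch assumes an idealized hard-knee compressor applied to just the two levels from the example, ignoring attack and release; the helper name is hypothetical.

```python
def compressed_peak(level_db, threshold_db, ratio):
    """Output level of a steady level after a simple hard-knee compressor."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio

quiet_in, loud_in = -28.0, -8.0
threshold, ratio, makeup_gain = -16.0, 3.0, 10.0

quiet_out = compressed_peak(quiet_in, threshold, ratio)   # below threshold: unchanged
loud_out = compressed_peak(loud_in, threshold, ratio)     # -16 + 8/3
print(f"range before: {loud_in - quiet_in:.1f} dB")
print(f"range after:  {loud_out - quiet_out:.1f} dB")
print(f"with makeup gain: {quiet_out + makeup_gain:.1f} dB to {loud_out + makeup_gain:.1f} dB")
print(f"gain without compression: peak at {loud_in + makeup_gain:+.1f} dBFS")
```

The last line is the clipping case: the same +10 dB of gain without compression pushes the peak above 0 dBFS.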

Of course, in an actual song situation you usually aren't constantly measuring the quietest and loudest parts of your song, so you won't have exact numbers. If you DID, you wouldn't even need a limiter because you'd know exactly how to set your compressor so that nothing would clip. Because this is not the case, it's ALWAYS good to put a limiter set to about -0.2dB or so at the very end of your mastering chain to make sure that some really loud spikes didn't get through the compressor. Some limiters also offer an input drive or saturation function that will boost the volume before limiting it, creating a slightly distorted but often pleasant sound.

Finally, there are multiband compressors/limiters. Why would you use these? Think about the following situation.. you have this kickass bassline and kick part. Man, it rules. You gotta keep the volume jacked up so people can hear it. Then you also have a cool vocal line that's sort of floating above everything else. If you're just using a normal compressor, the boosted bass parts are going to trigger compression of the entire waveform. So when the bass part gets dropped a few dB because it's going over the threshold, the vocals get dropped too, even though they might not be anywhere close to that threshold yet. Oops. A multiband compressor gets around this problem by separating the audio into several frequency bands (commonly three: low/mid/high), with individual compressor controls for each. This way, you can compress just the bass but not the vocals, or vice versa. Or anything else, really!
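Here's a minimal Python sketch of the band-splitting half of a multiband compressor. It assumes a deliberately simple split: two one-pole low-pass filters, with the bands formed by subtraction so that low + mid + high adds back up to the original exactly. Real multiband compressors often use steeper crossover filters (Linkwitz-Riley designs are a common choice), and the 200 Hz / 3 kHz crossover points and function names here are hypothetical.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100):
    """Simple 6 dB/octave low-pass filter."""
    a = 1.0 - math.exp(-2 * math.pi * cutoff_hz / sample_rate)
    y = 0.0
    out = []
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out

def split_bands(samples, low_mid_hz=200.0, mid_high_hz=3000.0, sample_rate=44100):
    """Split a signal into low/mid/high bands that sum back to the original.
    Each band could then go through its own compressor before being re-summed."""
    below_low = one_pole_lowpass(samples, low_mid_hz, sample_rate)
    below_high = one_pole_lowpass(samples, mid_high_hz, sample_rate)
    low = below_low
    mid = [h - l for h, l in zip(below_high, below_low)]
    high = [x - h for x, h in zip(samples, below_high)]
    return low, mid, high

signal = [math.sin(0.05 * n) + 0.3 * math.sin(1.3 * n) for n in range(2000)]
low, mid, high = split_bands(signal)
```

Because the bands sum back to the input, compressing only `low` (the bass and kick) leaves the mids and highs, where the vocal mostly lives, untouched.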

By the way, this should also explain why compressor plugin presets are not useful. How COULD they be? The nature of compression is such that you have to tailor it to individual tracks. The only time you should be using presets is if you've designed (or come upon) a preset that works really well for a certain type of sound, and you know how to recreate that sound well. This is the situation with me. I have a preset I created for my compressor and limiter that works extremely well for nearly all the original electronic songs I do. However, I can do this because, well, I've written enough original electronic songs that I tend to use the same production techniques in all of them. Thus the approach to mastering is going to be the same.

Here's an MP3 example of compression in action. The first loop (played for 2 bars) is uncompressed. The next one has a threshold of -15dB, a 4:1 ratio, and a little bit of gain. It has the same peaks as the first one, but you can hear it sounds a bit louder overall. The third one is pretty extreme, with something like a -30dB threshold and 10:1 ratio with lots of gain. Again, it's not TECHNICALLY louder than the first two, but it certainly sounds that way.

http://www.zirconstudios.com/Compression.mp3

--

While it's your choice whether or not to use compression (and tweaking a compressor is really the only way to find out whether you think it would work or not, though in my experience it usually does), limiting is essential as the final step in the mastering chain. Set the output to -0.2dB. The attack (if there is one) should be 0ms. The release is usually somewhere between 200 and 800ms. That's up to you to tweak, but 200-300ms is a safe number. This will ensure that you have no clipping anywhere in your mix.
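The limiter settings above (-0.2dB output, 0ms attack, a few hundred ms of release) can be sketched as a minimal peak limiter. This is an illustration under assumptions, not how any particular plugin works: the function name and the 250ms default are hypothetical, and real mastering limiters usually add look-ahead so that instant clamping doesn't distort transients.

```python
import math

def limit(samples, sample_rate, ceiling_db=-0.2, release_ms=250.0):
    """Minimal peak limiter: 0 ms attack, exponential release, hard output ceiling."""
    ceiling = 10 ** (ceiling_db / 20)  # -0.2 dBFS is about 0.977 in linear terms
    # Per-sample smoothing coefficient; a longer release means slower recovery.
    release_coeff = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    gain = 1.0
    out = []
    for x in samples:
        peak = abs(x)
        # Gain that would bring this sample exactly down to the ceiling.
        target = ceiling / peak if peak > ceiling else 1.0
        if target < gain:
            gain = target  # 0 ms attack: clamp immediately
        else:
            # Release: drift back toward the target without ever overshooting it.
            gain = target + (gain - target) * release_coeff
        out.append(x * gain)
    return out

# A quiet signal with two overs (1.5 and -2.0 would clip at 0 dBFS).
processed = limit([0.5, 1.5, -2.0, 0.5, 0.5], sample_rate=44100)
print(max(abs(y) for y in processed))
```

The release is why the quiet samples right after the overs stay turned down for a moment: too short and you hear pumping/distortion, too long and the mix sounds squashed after every spike.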

As for equalization, you should not be doing anything major here. If, when listening to your final mix, you feel the need to drop any frequencies more than 3dB or so, you need to go back and edit individual parts again. You did something wrong in the balance phase of the process. If you were careful earlier, the most you'll have to do here is perhaps shave off a little bit of bass or emphasize the highs a bit.

Encoding

Not really part of the production process per se, but if you plan on making your song available to other people, you'll probably be converting it to MP3. I think you can't really go wrong with the RazorLame software, which runs on the excellent LAME MP3 encoding engine. There's no reason not to use VBR (variable bitrate), which basically maximizes quality while minimizing size. VBR is the only way that Disco Dan's "Triforce Majeure" mix from Zelda 3 sounds so good despite fitting under our size limit and being a very rich and long mix. There's no reason to go above 224 or 256kbps max VBR, and some would argue even those numbers are high. In terms of minimum VBR, you can go all the way down to 32kbps and not really hear any hit in quality. However, if you have a piece with a huge dynamic range, like an expressive piano solo or an orchestral piece, you might want to bump that minimum up a bit.
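If you'd rather call LAME directly instead of going through RazorLame, the VBR window described above maps onto LAME's `-V` (VBR quality), `-b` (minimum bitrate in VBR mode) and `-B` (maximum bitrate in VBR mode) options. A small sketch that just builds the command line: the `-V 2` quality level and the file names are hypothetical, and it assumes the `lame` executable is installed and on your PATH if you actually run it.

```python
import shlex

def lame_vbr_command(wav_path, mp3_path, quality=2, min_kbps=32, max_kbps=256):
    """Build a LAME command line for VBR encoding within a min/max bitrate window."""
    return ["lame", "-V", str(quality), "-b", str(min_kbps), "-B", str(max_kbps),
            wav_path, mp3_path]

cmd = lame_vbr_command("mix.wav", "mix.mp3")
print(shlex.join(cmd))
# To actually encode: import subprocess; subprocess.run(cmd, check=True)
```

For a piano solo or orchestral piece, you'd raise `min_kbps` rather than the maximum.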

For more detailed information on encoding, I'm probably not the person to ask. Usually, if I'm trying to really maximize the quality of a mix while keeping it under the 6MB limit (I did the encoding for Wingless' last two remixes), I pretty much just tweak different settings gradually until I get it as close to 6MB as possible.
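Before tweaking, it helps to know roughly what average bitrate the size limit allows, since MP3 size is basically average bitrate times length. A quick sketch, assuming "6MB" means 6 x 1024 x 1024 bytes (the limit's exact definition is an assumption) and ignoring ID3 tags and header overhead, so leave a little margin:

```python
def max_average_kbps(duration_s, limit_bytes=6 * 1024 * 1024):
    """Highest average bitrate (kbps) that keeps an MP3 of this length under the limit."""
    return limit_bytes * 8 / duration_s / 1000

def estimated_size_mb(avg_kbps, duration_s):
    """Approximate file size in MB (1024 * 1024 bytes) for a given average bitrate."""
    return avg_kbps * 1000 / 8 * duration_s / (1024 * 1024)

print(round(max_average_kbps(7 * 60), 1))  # a 7-minute mix: 119.8 kbps on average
```

With VBR you can't set the average directly, but this tells you whether a given min/max window is in the right ballpark for a long mix.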

Conclusion

Production, like any aspect of music making (or just about anything in life), is primarily about practice. The tips I've listed above will hopefully be of some help to you when refining your own technique, but they are complements to practice, not substitutes. So, when producing a song, try to take breaks to keep yourself objective. If you've been working on something all day, get some sleep and approach it the next day with new ears. Bounce it off some non-musical friends and see if they notice anything you missed. Listen to the mix on different sets of speakers or headphones. Above all, listen to a work in progress all the time! Some might disagree with me on this, but if you keep listening to the same track while producing it, and you try to stay as objective as possible, you can REALLY fine-tune it and make it shine. It's obsessive, but it works for a whole lot of people.

If all else fails and you really don't know how to achieve a particular sound, listen to a song that SOUNDS really good to you, and try to break down what gives it that nice sound. I know many artists who do this, and while it seems a bit counterintuitive if you're trying to create your own sound, it's much more helpful than you'd think. Understanding what leads to a given sound or feel is powerful because you can add it to your existing repertoire of techniques and even combine it with them.

In closing, feel free to post here with comments, questions, additions, criticisms, or whatever else you want.

Now go make some music!

-

What do you mean by sync problems, exactly?

Like I will have 3 MIDI tracks, and 2 audio tracks, and everything is fine and dandy. Then I add one more audio track, and now one of the first 2 is lagging. My WIP I just posted (Rockin' Four-Two) has sync problems all over the place as a result, whereas if I mute all but one audio track, all of them are actually dead on.

-Ray

Wait, so they're lagging in relation to each other? Are you sure they're lined up properly?

-

Should this have been a direct post by OCR standards? Nah, at least I don't think so, with as many problems as it has, to my ears. But eh, then again, would it have been posted any other way? I'm not a judge, but I've seen songs with fewer flaws turned down flat, in comparison...Then again, I'm willing to bet that this would have gotten a resubmit...because it has many good things going for it, and many "easily" fixed problems.

Easily fixed problems don't mean a mix will be rejected. I personally have accepted plenty of mixes with easily fixable problems. Objectively speaking, when I designed the arrangement here, I did it in such a way as to incorporate a good level of interpretation, variation, and original material. If this were put through the panel, the arrangement would be fine every time. The vocals would be the only thing, if ANYTHING, that people would have a problem with, but again, I have a pretty good grasp of vocal standards and this passes the bar in that category.

But by "easily", that could have required you meeting again to change anything, and then, what would the fun be? Or Jill could have just used her mic to re-record the vocals at home, and so could Shonen; also, D-lux could have redone that...rap thing... And it would have turned into an internet collab...instead of the one or two takes this got at 5am (under my impression)...All in all, this is dedicated to that e-meeting...etc. And I guess the "spirit" of the thing matters here, especially to the artists; otherwise, under any other circumstances, this mix wouldn't be so much of a big deal...And ironically enough, it probably wouldn't have suffered so much production-wise either. Anyway, that's my take on it.

Let me be clear for a minute. As I posted a few times, we didn't finish this entirely in one night. Most of it was sketched out and conceived in one night: the lyrics were all written out, the basics of the arrangement were set down, the guitar rhythms were set, and so on. But Jill's vocals were recorded on her setup at home, and Taucer's guitars and Shonen's part were sent to me online a few days later, where I integrated them with the rest of the mix on my own computer and polished it from there. DLux's part was the one recorded on my mic, and as you can hear, the recording quality is significantly lower. If everything else had been done on that mic, you'd be able to hear it!

DJP passes whatever he wants to pass, and he understood what kind of song this was, I am guessing...I have no problem with that...But to the artists, don't expect everyone else in the community to understand, or even if they understand, to appreciate this song the way you do. It's all just opinion anyways.

Absolutely! Your feedback is much appreciated. Just clarifying a few things is all.

How do you make your collabs?

Honestly, you're making it out to be more complicated than it is. I've done successful collabs, so I know that the methods I proposed do work.

Presumably you are collabing with someone who's not an asshole. It's your own dumb fault if you chose to work with someone who didn't want to pull his weight. Also, the two MIDI-related methods I described are not the same. In the first method, both people exchange MIDI files and work on the arrangement together. Then, whoever has the better production skills puts sounds to the MIDI. In the other method, the work is split evenly down the middle: one person does ALL of the arrangement, and the other does ALL of the production. This is the kind of thing I am doing with GeoffreyTaucer. I enjoy it.

In terms of THIS WEBSITE, most people use FL Studio or Reason. Other sequencers are simply in the minority, so if you're giving general advice, it makes no sense to address it to the minority. This may be the case with Cubase, but in terms of general collab methodology, working with the same sequencer is a commonly accepted practice, not only in this community but in the professional world.

Let me just say that I had no problem with this. In the Lover Reef project, I received finals that had slight timing issues. If you have any idea as to what you're doing, you can make micro adjustments, taking the waveform and chopping it up in between transients and re-positioning the new chunks to the beat. This is how I processed ALL of the vocals and instrumentals in the aforementioned remix.
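The "chop between transients and re-position to the beat" step mostly comes down to figuring out how far each chunk has to move. A minimal sketch, assuming you already know where the transients are (by eye or from your sequencer's slicing tools); the tempo, grid resolution, and onset times below are hypothetical:

```python
def snap_shifts(onsets_s, bpm, steps_per_beat=4):
    """For each transient time, return (onset, nearest grid time, shift) in seconds."""
    step = 60.0 / bpm / steps_per_beat  # 16th notes when steps_per_beat=4
    result = []
    for t in onsets_s:
        grid_t = round(t / step) * step
        result.append((t, grid_t, grid_t - t))
    return result

# Hypothetical transients from a slightly loose take at 120 BPM.
for onset, target, shift in snap_shifts([0.012, 0.118, 0.262, 0.495], bpm=120):
    print(f"{onset:.3f}s -> {target:.3f}s (move {shift * 1000:+.0f} ms)")
```

Each chunk between two transients then gets moved by its shift. Shifts of a few milliseconds are usually all a decent live take needs.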

The most efficient method is to simply send an MP3 of the rendered track to someone. That person loads the MP3 into their sequencer, then records or sequences their new part over it. Then they export a WAV of ONLY their part and send it right back to you. There is zero possibility for error here unless it is a live performance, in which case, as I said, micro-adjustments are all you need to fix any minor problems.

In addition, while there is no particular *harm* in discussing a sound that you're going for, you're placing a bit too much emphasis on the engineering aspect here. In a collab, especially in a community like this where everything is really just done for fun, you don't need strict standards on the recorded material that you are getting (or the sequenced material). I know for a fact that you personally spend an exorbitant amount of time tweaking things. I think that is a bad idea and I discourage other people from doing that. If you get a less than perfect vocal recording, so what? Deal with it! Things don't need to be perfect if the goal is an enjoyable song. It's more important to capture a soulful performance at the right time. No one here is trying to get a Grammy.