
Everything posted by Nabeel Ansari

-

Not really fair to compare a string library to a full-ensemble sketching library. Albion is a pretty amazing sample library; it stands by itself really well and can create an emotive, full spread. It's not as agile with line-writing as a full string library, but again, that's not really a fair comparison. What you get with Albion is tone and ease of mixing. That being said, I think you're greatly overusing the word "need". You "want" gear and you "want" to get started on a music journey. You don't "need" to. "Need" is stuff like: your life circumstances will severely diminish if you don't get it. Unless you've somehow bet your life's finances on a music career you haven't even started yet, that's probably not the case.

-

Volume Volume Volume - relative and absolute

Nabeel Ansari replied to BloomingLate's topic in Music Composition & Production

Here are some answers in plainer English:

1. Clipping sounds like crackling distortion. Just keep raising the volume and eventually you'll hear it. That's the rule in computers: when the signal goes past 0 dB, it clips, because the digital output can't represent anything louder.

2. dB is just a measure of amplitude (loudness). Hz is something completely different. A pure tone is a wave that repeats some number of times per second. 1 Hz is one cycle per second, 2 Hz is two per second, and so on. The range of human hearing is 20 Hz to 20,000 Hz (that's generous; it varies from person to person, and my ears stop at 17,000). Go to http://www.szynalski.com/tone-generator/ and you'll quickly understand what Hz means in terms of what you hear. Low Hz is bass, high Hz is treble. Sweep the Hz on that site from the lowest number to the highest and you'll hear the pitch go from lowest to highest.

3. Normalize just means the program finds the loudest point in your song and raises the entire song by the same amount, so that THAT particular loud point sits at 0 dB. Usually the normalize function lets you choose the target level (-3 dB, -1 dB, 0 dB, etc.).

4. The overall loudness of your song is whatever is passing through the master channel. That's what you're hearing, and the master channel's meter tells you what it is.
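To make points 1 to 3 concrete, here's a minimal Python sketch (not from the original post; all function names are made up for illustration). It assumes audio is stored as floating-point samples where 1.0 is full scale, i.e. 0 dBFS. It converts between amplitude and dB, shows that clipping is just flattening anything past full scale, generates a sine at a given number of Hz, and does a simple peak normalize.

```python
import math

def amplitude_to_dbfs(amplitude: float) -> float:
    """Linear sample amplitude (1.0 = full scale) to dBFS."""
    if amplitude <= 0.0:
        return float("-inf")
    return 20.0 * math.log10(amplitude)

def dbfs_to_amplitude(db: float) -> float:
    """dBFS back to a linear amplitude."""
    return 10.0 ** (db / 20.0)

def hard_clip(samples, ceiling: float = 1.0):
    """What happens past 0 dBFS: anything beyond full scale gets flattened."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]

def sine(freq_hz: float, seconds: float, sample_rate: int = 48000, amplitude: float = 0.5):
    """A pure tone: freq_hz full cycles every second."""
    n = int(seconds * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def peak_normalize(samples, target_dbfs: float = 0.0):
    """Scale the whole signal by ONE gain so its loudest sample lands on target_dbfs."""
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        return list(samples)  # silence: nothing to normalize
    gain = dbfs_to_amplitude(target_dbfs) / peak
    return [s * gain for s in samples]

if __name__ == "__main__":
    tone = sine(440, 1.0)                      # 440 Hz, peaking around -6 dBFS
    loud = peak_normalize(tone, target_dbfs=-1.0)
    peak = max(abs(s) for s in loud)
    print(round(amplitude_to_dbfs(peak), 2))   # -1.0
    print(hard_clip([0.5, 1.4, -2.0]))         # [0.5, 1.0, -1.0]
```

Note that normalizing only looks at the single loudest sample; it says nothing about how loud the song feels overall.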

Volume Volume Volume - relative and absolute

Nabeel Ansari replied to BloomingLate's topic in Music Composition & Production

Ah, I see. In that case, I get what you're saying. You definitely never want to touch the internal volume. Mine's always at 100%, but that's because I have separate volume knobs for my headphones and speakers. I have zero consistency in where my interface's physical output volume is set; the output knob for my monitors moves at least 10 times a day just from listening to random stuff (other music, YouTube videos, etc.). But when I'm making music and doing a final check on my perceived loudness, I definitely pull up something professionally done and released on iTunes to reset the knob to where I'm comfortable listening to mastered music. At this point, I can just visually see the knob position where professionally done music sounds "powerful and comfortable" to me. It's around 30% on the dial for the speakers, and 100% for the headphones (with the Sonarworks calibration applying -7.9 dB and the 250 ohm DT 880s, my interface really has to work hard). Then I take that familiar comfort zone and mix to my desired perceived loudness there. This way, I know exactly how my music will contrast with other albums people might be streaming alongside mine, because I picked that volume level while listening to other stuff. And I know that other stuff peaks at 0 dB, so I put mine at 0 dB too. If they both peak at 0 dB and they both sound just as loud, then they are just as loud, absolutely, on all devices. Timaeus hit the nail on the head: before you mix anything, you should set your listening volume while listening to a reference track, preferably something professionally done and commercially released.

Volume Volume Volume - relative and absolute

Nabeel Ansari replied to BloomingLate's topic in Music Composition & Production

@timaeus222 I think you're going in a perceived-loudness direction, which is a little more advanced than the issue BloomingLate has. The issue here is simply that OP doesn't understand the dB scale, which is the "absolute loudness" measurement he's looking for. BloomingLate, you can raise the master track of your song up to 0 dB FS, which is the digital clipping limit. You should always mix to 0 dB because that's the standard for mastering. 0 dB is marked at the top of the level meter in your DAW. The dB number says nothing about the perceived sound energy unless you also consider dynamic range (you can still have soft music whose loudest peak is 0 dB). If you don't like a high amount of sound energy, mix to 0 dB but avoid any master compression or limiting, and just make sure nothing goes over. In other words, avoiding 0 dB doesn't keep your music from sounding too loud; it just annoyingly makes people raise their volume knobs relative to all the other music they listen to. To explain your own example, trance music isn't loud because it's at 0 dB (the "red" part), it's loud because it's heavily compressed with little dynamic range, so the sound energy over time is densely packed and you feel it harder in your ears. As a practical solution, you can also render your mix so it never hits 0 dB (to truly avoid the need for any master compression and limiting) and then just normalize it. This will make your music at least hit the same peak level that other music does, so listeners shouldn't have to vastly pump up the volume to hear it. However, I would wager that without any compression whatsoever, people will still be raising their volumes. Most music is compressed in some form nowadays, and I can't remember the last album I came across with full dynamic range (besides classical music, which is impossible to listen to in environments like the car because of that dynamic range).
As for the volume levels of your devices (headphones, laptops, stereo), none of that matters at all. If someone's listening device is quiet and they need to dial it to 70% to hear anything, that's their problem. If your music is mixed to the same standards as everyone else's, it will sound the same on their system as any other music they listen to, and that's what you shoot for. This is the 0 dB thing I was talking about before. How loud it sounds is a matter of handling dynamic range with tools like compression, and that's what Timaeus is talking about with referencing a track to match perceived loudness. That stuff is its own rabbit hole and takes a lot of learning and experience to do properly. tl;dr If you mix so that you go up to, but never cross, 0 dB, you will never blow out speakers/headphones and your signal won't distort. This is one of those things that should just be automatic for every piece of music you create.
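A rough way to see why the peak number alone says nothing about sound energy is to compare peak level with RMS level. RMS is only a crude stand-in for perceived loudness (real loudness meters use LUFS per ITU-R BS.1770, which this sketch does not implement). This is a hypothetical Python illustration, not something from the thread: all three test signals peak at (or within a hair of) 0 dBFS, but one is a quiet passage with a single full-scale hit, one is a plain sine, and one is a square wave standing in for an extremely squashed master.

```python
import math

def peak_dbfs(samples):
    """Level of the single loudest sample, in dB relative to full scale."""
    return 20.0 * math.log10(max(abs(s) for s in samples))

def rms_dbfs(samples):
    """Average energy (root mean square) in dBFS: a crude proxy for how loud it feels."""
    return 20.0 * math.log10(math.sqrt(sum(s * s for s in samples) / len(samples)))

def crest_factor_db(samples):
    """Peak-to-RMS ratio; small values mean squashed, dense material."""
    return peak_dbfs(samples) - rms_dbfs(samples)

SR = 48000
N = SR  # one second of audio

# Quiet passage (a sine peaking near -26 dBFS) with one full-scale hit: peaks at 0 dBFS anyway.
sparse = [0.05 * math.sin(2 * math.pi * 220 * i / SR) for i in range(N)]
sparse[1000] = 1.0

# Plain full-scale sine.
sine = [math.sin(2 * math.pi * 220 * i / SR) for i in range(N)]

# Square wave: every sample at full scale, like an extremely over-limited master.
square = [1.0 if math.sin(2 * math.pi * 220 * i / SR) >= 0 else -1.0 for i in range(N)]

if __name__ == "__main__":
    for name, sig in (("sparse", sparse), ("sine", sine), ("square", square)):
        print(f"{name:>6}: peak {peak_dbfs(sig):6.1f} dBFS, "
              f"RMS {rms_dbfs(sig):6.1f} dBFS, crest {crest_factor_db(sig):5.1f} dB")
```

Running it, the sparse signal's RMS lands around -29 dBFS while the square wave's sits at 0 dBFS, even though both peak at exactly 0 dBFS. That gap is the dynamic range difference the post is talking about.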

Advice on Channeling Creativity from Anxiety

Nabeel Ansari replied to DarkEco's topic in General Discussion

The most important thing prolific artists and composers will tell you is that this stuff becomes incredibly easy if you just do it all the time, consistently. Anxiety about being creative is self-fulfilling, since the issues you describe (not having ideas, not knowing what to do) come from being out of practice. Would you ask someone to run a 5-minute mile if they'd been a couch potato for the last 3 years? Being a creative is like being an athlete. If you don't keep those muscles in shape, they'll never, ever work when you ask them to. Just make stuff, and stop worrying about whether it's bad. Bad art can improve; nonexistent art cannot. And remember:

FL Studio - Suddenly stopped playing in mixer

Nabeel Ansari replied to music2go's topic in Music Composition & Production

What's the issue pertaining to Shreddage?

I want to build you a computer

Nabeel Ansari replied to prophetik music's topic in General Discussion

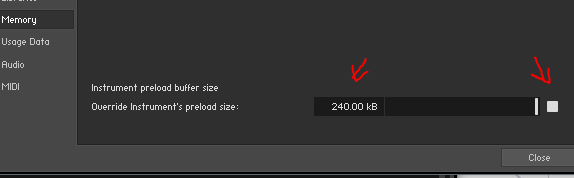

Does that happen in standalone Kontakt with those patches as well? If it only happens when your project is huge, then it's just that a WD Black can't stream a metric ton of sample data, since Kontakt libraries are usually configured for DFD (direct from disk) streaming. If you have extra unused RAM, check whether it improves when you manually override the preload buffer and set it to the max size; that places less strain on the hard drive. If it happens in standalone too, then it's probably either drive failure or Windows messing with your disk usage (make sure real-time protection is turned off, and that your computer isn't running backups, virus scans, or whatever). Either way, moving to an SSD is what you want for sample streaming, which is pretty much expected by Kontakt libraries nowadays.

First off, for a career primer on what all this stuff is like, there's nothing more accurate and comprehensive than the GameSoundCon annual survey. Here's the report from 2017: https://www.gamesoundcon.com/single-post/2017/10/02/GameSoundCon-Game-Audio-Industry-Survey-2017 Networking is absolutely the most important thing you can do as a composer (really, as anyone who wants to stay ahead in any industry). As shown in the report, a little over half of all reported gigs came from recruitment and referrals. I've been presented a lot of opportunities by knowing a lot of people who have things going on. For example, a composer/business mentor I met years ago recently told me that he (and even his assistant) were getting too busy for the gig load coming into his company, and that he wants to rope me in to help out with that work because he sees my skill set as up to the task. As for groups and places, you really want to join the Game Audio Network Guild, and start attending GDC if you can afford it. They have cheaper Expo passes that don't give you access to the audio panels but don't inhibit your ability to network or attend the Guild mixers and events at all. GDC is really the most important networking event for every sub-industry of the game industry. Getting to know the faces of all the people in the industry with you is essential. The Guild itself offers a lot of resources: discounts, sure, but also things like contract templates for your gigs. That being said, it's important to learn how to network. Do not go around handing out business cards expecting that to do anything, and don't be that person walking around asking if anyone's hiring. Networking is about building actual relationships with people: colleagues, friends. I essentially go to GDC just to hang out with people. When you meet someone really cool and fun to talk to, it's very memorable.
When you meet someone who hands you a card and says "I write and produce music", it's a massive yawn. Literally everyone else in the room might do what you do, and half of them might do it better. Think of it like building a spider web. You can make a lot of connections and build a really huge web... but it's just going to rip and fall apart when it tries to catch something if all those connections are weak. Even if you have a small web of strong connections (closer to my situation), that web will hold steadfast when something runs into it. The ideal is starting with a small one and building it into a large one over the years, while always keeping it strong. Lastly, OCR is not a great place to get advice about this stuff. There aren't a lot of professionals actively working in the industry who hang out on these forums. I highly recommend joining "Business Skills for Composers" on Facebook. It's a group of a few thousand people, and a lot of very successful people who like to mentor hang out there. The advice is invaluable, and the existing material covering topics like how much to charge, how to network, how to pitch, managing the rights to your work, and maximizing your opportunities (whether that's $$$ compensation or planting seeds for more opportunities) is way more than enough to chew on for the first year of career development. It's a very focused, heavily moderated group, so all the content is on point and they make sure the discussions stay productive. There's no first step I'd recommend more than joining BSFC, reading the discussions, and asking your own questions. Lots of people apply the advice they get there to great effect (for example, people often don't realize they can raise their rates a lot and companies will still accept the price). Here's a great guide that @zircon sent me when I was younger and had no idea what any of this was about.
http://tinysubversions.com/2005/10/effective-networking-in-the-games-industry-introduction/ As a final note: from the perspective of networking, your strongest asset is you. Your personality, your work ethic. When people are looking to hire, spread the word, refer gigs, whatever it is, they don't contact people who are flaky, people who are assholes, people who are unprofessional or not confident, etc. Being a composer is like being a salesperson in some respects. It's not just your product, and it's not just going around posting ads; it's very much about becoming someone people trust and like working with, so that these relationships keep bearing fruit. You need to develop a personality that makes people say "I really like this guy / I really like working with this guy."

-

NEW ALBUM - COLOURS by PRYZM (Electro Organic Prog)

Nabeel Ansari replied to Nabeel Ansari's topic in General Discussion

Thanks so much! I was trying to bring those influences and elements into a context that's more familiar and approachable for people.

I think if you'd had this idea years ago, when this community was musically active (instead of just socially active), it would've caught on. Now the forums are dead, and submitted OCR remixes come from people who don't necessarily even hang out here. I also think that with the existence of Materia Collective, the niche of a supportive community of available live musicians is already filled, and there's no need to try to get that started on OCR. Furthermore, the reason Materia attracts musicians is that they do licensed, paid work. Their albums are commercial, paid releases with digital distribution to stores and services like iTunes, Spotify, Pandora, etc. OCR remixes are only ever free, never distributed, and the copyright situation is always kind of a grey area. I've wanted to collaborate with some Materia musicians in the past, and they've always sort of shied away from it, hinting that making music for OCR doesn't seem like a good use of their time because it won't net them anything. If I wanted to invest in getting good performances in my music, the OCR submission would be a secondary concern, not the primary one. Our few paid albums would be the exception, like Megaman 25th Anniversary, Crypt of the Necrodancer, and the Tangledeep Arranged album (not sure if that one counts as "OCR", but most of the artists are OCR anyway, so I included it).

-

Yeah, you're right, I've done that plenty in the 2 years I haven't said a word on this forum.

-

I think you're really overreacting and not at all responding to what John is actually frustrated about. He's talking about the decline of music education curriculum in schools, not the moral consequences of pop music being popular. It also sounds like you're bringing a lot of "everything is elitist bullshit" baggage into the conversation when it's completely unwarranted. People don't deserve to be antagonized just because they think artistic standards are valid, and you're kinda framing that whole camp as a sort of angry mob when they're not. I mean, unless your frame of reference for "people who care about art" is something like... youtube commenters.

-

First off, like I said, the 32 Ohm has a different response than the 250 Ohm, so right off the bat you're not getting a truly accurate experience here; in fact, every pair of headphones is different. You see the blue smudge surrounding the blue line that's the response of the headphones? That's the deviation across just 250 Ohm DT 880s. You'd have to send your headphones to Sonarworks and have them measured to get an exact calibration (I find the average is good enough, since I have other ways to reference, like monitors and another dope-sounding pair of headphones). It sounds like you're just used to your headphones giving you very shrill highs. Listen to a lot of different music with the correction on and your ears will get a better sense. You can't switch frequency response profiles in a matter of minutes and expect not to be disoriented. I wouldn't reference your own music at all; in fact, you should treat this as an opportunity to spot issues in your past mixes. It's like doing a digital painting on a crappy cheap monitor and then looking at it on a top-dollar calibrated IPS... all the colors are going to look hella wrong, nothing like what you wanted. Just listening to your Time Traveler track on my monitors, which have no calibration shenanigans at all, the treble does sound pretty weak. So I think a lot of the issues you're hearing come from the mix quality, not the calibration screwing up. Also, remember to turn off the calibration plugin completely, using DAW bypass, before rendering the music for other people to listen to. Calibration is for your ears only. I can't stand the sound of uncalibrated DT 880s anymore, since there's so much low end missing and the high end sounds like it's shrieking compared to a natural response (like on my monitors). If I toggle the calibration OFF, I'm like "oh god, the mix died, and its ghost is trying to hunt me down and kill me". That being said, I never keep the compensation at 100%.
There's a dry/wet knob right in the program, and I usually set it around 80%. I get a little of the sizzle back (personal taste), I mostly keep the newfound bass response, and the low and high mids are about even. It's a good compromise. As for volume, because it's EQing your final signal, it has to reduce the volume by roughly as much as it boosts anywhere across the spectrum; otherwise it would clip. You can toggle off "Avoid Clipping" right under the output meter, but I wouldn't advise it, because... why clip? The idea is simply that you set a new monitor level for your whole system once you're running calibration on everything. Lastly, yes, you can EQ it yourself, but use a linear phase EQ or it'll screw up the sound a lot. Also, you know... you could just not. Andrew Aversa (zircon) has mixed pretty much exclusively on uncalibrated DT 880s for like a decade now. His mixes are well-balanced because he just knows what a good mix sounds like through them. Personally, the uncalibrated DT 880s pretty much defined what people told me they didn't like about my mixes: my low mids were scooped out, the bass was too strong, and the high mids were harsh. Surprise, all of that was compensation for the bad response of the headphones. I like an even, full response because I think that's a good way to listen to music, I'm hearing what studio engineers hear when they mix all my favorite records, and it's a closer response to proper studio monitors in a well-treated room.
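Here's a hedged Python sketch of the two volume ideas above. It rests on assumptions of mine, not documented Sonarworks behavior: that the dry/wet knob is a plain linear blend of a delay-compensated, linear-phase corrected signal with the dry signal, and that "Avoid Clipping" behaves like a worst-case trim equal to the largest remaining boost. The correction curve values are invented for illustration.

```python
import math

def db_to_gain(db: float) -> float:
    return 10.0 ** (db / 20.0)

def gain_to_db(gain: float) -> float:
    return 20.0 * math.log10(gain)

def blended_gain_db(correction_db: float, wet: float) -> float:
    """Net gain at one frequency when a correction EQ is mixed with the dry signal.

    ASSUMPTION: the wet path is linear phase and delay-compensated, so wet and dry
    add in phase: out = (1 - wet) * dry + wet * (dry * correction_gain).
    """
    if not 0.0 <= wet <= 1.0:
        raise ValueError("wet must be between 0 and 1")
    return gain_to_db((1.0 - wet) + wet * db_to_gain(correction_db))

def anti_clip_trim_db(curve_db, wet: float = 1.0) -> float:
    """Worst-case output trim (hypothetical rule): pull everything down by the largest boost."""
    biggest_boost = max(blended_gain_db(g, wet) for g in curve_db)
    return -max(0.0, biggest_boost)

# Hypothetical headphone correction in dB per band (Hz), NOT real Sonarworks data.
CURVE = {60: 6.0, 250: 1.5, 1000: 0.0, 3000: -2.0, 8000: -5.0}

if __name__ == "__main__":
    for wet in (1.0, 0.8):
        bands = {hz: round(blended_gain_db(db, wet), 2) for hz, db in CURVE.items()}
        print(f"wet {wet:.0%}: {bands}, trim {anti_clip_trim_db(CURVE.values(), wet):.2f} dB")
```

One thing this shows: under these assumptions, an 80% wet setting turns a +6 dB correction into about +5.1 dB rather than 0.8 × 6 = 4.8 dB, because the blend happens on amplitudes, not on decibels.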

-

NEW ALBUM - COLOURS by PRYZM (Electro Organic Prog)

Nabeel Ansari replied to Nabeel Ansari's topic in General Discussion

You’re the first person to call out Colour of Time, which makes me really happy because imo it’s my favorite piece on the album as well.

I didn't know "Music Business" was code for PPR.

-

A soundcard does not free up CPU load from your projects, most of the time. You have to buy more expensive soundcards with on-board DSP (basically VSTs that run only on the soundcard's processor) and then use that on-board DSP instead of other VSTs to free up CPU. A soundcard also doesn't improve sound quality that much if you're not experienced. It will, however, lower the noise level a lot, and using proper ASIO drivers made for the soundcard will let your projects handle more instruments and effects, CPU-wise. Your choice of headphones (DT 880) and DAW (FL Studio) doesn't matter at all when choosing an audio interface. As for the 32 Ohm version, in my experience they get much noisier, much more quickly. You have to drive more level into the 250 Ohm, but the noise level is way better. That's from testing both impedance versions of the headphones in the exact same headphone jack on my interface. The 250 Ohm also has a better frequency response, but you really want to pick up Sonarworks Reference 4 (headphone edition) so you can correct the frequency response and get all that bass back. DT 880s have pretty weak bass and very shrill treble; Reference will flatten that out nicely.
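For the 32 vs 250 Ohm comparison, here's a back-of-the-envelope Python sketch using P = V²/R. It's an illustration, not a measurement: it treats each headphone as a pure resistor (real impedance varies with frequency), assumes both versions are equally sensitive per milliwatt (check the spec sheets; that's an assumption here), and assumes the interface's noise floor is a fixed voltage at the output that doesn't rise with the volume knob. Under those assumptions it shows why the 250 Ohm needs more drive and why the same noise voltage is quieter in it.

```python
import math

def volts_for_power(power_mw: float, impedance_ohms: float) -> float:
    """RMS voltage needed to push power_mw into a purely resistive load (P = V^2 / R)."""
    return math.sqrt(power_mw / 1000.0 * impedance_ohms)

def extra_drive_db(z_low: float, z_high: float) -> float:
    """Extra output voltage, in dB, the higher-impedance load needs for the same power."""
    return 20.0 * math.log10(volts_for_power(1.0, z_high) / volts_for_power(1.0, z_low))

def noise_reduction_db(z_low: float, z_high: float) -> float:
    """How much less power a fixed noise voltage V delivers into z_high than z_low (V^2 / R)."""
    return 10.0 * math.log10(z_high / z_low)

if __name__ == "__main__":
    for z in (32, 250):
        print(f"{z} Ohm needs {volts_for_power(1.0, z):.3f} V RMS for 1 mW")
    print(f"250 Ohm needs {extra_drive_db(32, 250):.1f} dB more voltage for the same power")
    print(f"and a fixed noise voltage lands {noise_reduction_db(32, 250):.1f} dB quieter in it")
```

Both numbers come out to about 8.9 dB, which lines up with the experience above: you turn the interface up more for the 250 Ohm pair, but the hiss you hear is lower.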

-

NEW ALBUM - COLOURS by PRYZM (Electro Organic Prog)

Nabeel Ansari replied to Nabeel Ansari's topic in General Discussion

Thanks guys! Means a lot coming from people who were making music before I ever even came here.

Hey guys,

After 9 years on OverClocked ReMix, basically starting my musical adventure here on the forums (first as Neblix, then moving from that name to my real name, then starting a new brand), I'm proud to finally release my first album of original music, COLOURS.

Bandcamp: https://pryzmusic.bandcamp.com/album/colours
CD Baby: https://store.cdbaby.com/cd/pryzm
OverClocked Records: https://overclockedrecords.com/release/colours/

It will also be coming to all major storefronts in the coming week, including streaming platforms! It's already available for free on YouTube and SoundCloud as low-quality streams.

COLOURS is my debut album, wrapping up my early young-adult experience and my entry into the world as an independent creative and engineer. With this album comes the introduction of the PRYZM, my metaphor for assimilating different influences across the spectrum of music. Music can be expressed in many different ways, and my motivation to compose is to learn all of these different expressions and merge them into my identity as a composer.

You can also follow my new social media pages to stay up to date with new music!

OFFICIAL WEBSITE: https://pryzmusic.com/
FACEBOOK: https://facebook.com/pryzmusic
TWITTER: https://twitter.com/pryzmusic
YOUTUBE: https://www.youtube.com/channel/UCkomW-RLYNvAzhGs0dVofhA
SOUNDCLOUD: https://soundcloud.com/pryzmusic
TWITCH.TV: https://twitch.tv/pryzmusic

-

I think it's more like they have a good point that MS Paint style left-to-pencil-right-to-erase is a pretty good way to do it, but having lived on both sides for a good amount of years, and having been evangelical specifically about FL's piano roll, it's really not the dealbreaker they make it out to be. I think people in general underestimate their own ability to adjust to different workflows.

-

Most DAWs have parametric EQs with visualizers. Patterns are nice but constricting when they're the only option; S1's implementation of patterns is better because they're optional. (The rest of this point is just opinion; feel free to skip.) Patterns only work for all types of music after a few years of learning how to work around them to write what you want (in other words, not outright prohibitive, but an annoying philosophical hindrance). They also have zero parity with the arrangement view. In other DAWs, when you create a MIDI clip in the timeline, it exists there in the timeline; when you automate CC in the piano roll, it's the same automation displayed in the timeline. In the piano roll you can see everything on the track across the timeline, not just the clip you have selected. This gives you a more comprehensive, holistic view of your music instead of thinking of it as a complex combination of independent objects, which rarely works unless you're writing electronic music. When working with patterns, you're constantly doing mental bookkeeping to remember how each pattern fits into the rest of the music, because the piano roll sure as hell isn't going to tell you. The little mini-preview they added helps, but it's still a flawed design. Every single DAW has a piano roll. A couple of other DAWs' ghost note implementations are vastly superior to FL's in that they don't require you to funnel all your part-writing into a single pattern (which makes it useless for the arrangement view, since it's just a single-track jumbled mess). Additionally, a DAW like S1 actually lets you edit multiple MIDI channels at the same time, instead of only viewing them. This is pretty crucial when transposing or altering chords across several patches at once. Literally every DAW has a duplicate bar function; in S1 you just highlight notes and hit D. DAWs like Reason also have mini playlist views. FL was about the last DAW to support good time signature changes.
I'm just responding to "most of which I don't see in other DAWs"; it's more the other way around. Most DAWs have most of these things, except for some key differences like lifetime free updates, and then patterns, which I don't think are really advantageous to anyone except people who had them as their first music production experience. And even then, not so much: I did it for 8 years, then switched off and never missed it. There's nothing patterns can do that other DAWs can't. There's other stuff I don't miss about FL, like the number of clicks it takes to do things. Setting up multi-MIDI-channel samplers is freaking horrifying; in S1 it takes about 4 or 5 seconds to get 16 Kontakt MIDI channels and 16 corresponding mixer outputs. Also, the lack of native MIDI support in that area: having to link your controller's knobs to manually configured CC knobs inside the MIDI Out channel just to get stuff like modwheel and sustain pedal? Ridiculous. Applying FX to audio clips is also light-years faster in S1: you can put FX on the clip itself and then print in place (good for sound design in electro segments). In FL you have to assign the audio clip to a mixer channel. There's also that horrid behavior where you can slice stuff but the slices are still part of that one "clip" object instead of splitting into independent data. Audio editing in general is bad in FL because everything is abstracted into clip containers, and a simple volume crossfade between two overlapping clips is a whole ordeal instead of hitting something like "X" in other DAWs. Don't even get me started on "automation clips". It just seems like everything I'd want to do in a DAW takes extra effort to pull off in FL Studio. I get that people really like it and are comfortable with it, and you can work with anything to create great music. But the usage and the users are not a testament to good design or a good learning curve.
So I'll never recommend FL to anyone, but I'd never be bothered if I had to work with someone who used it, since I'd trust them to know how to coerce the spaghetti to get a good result.
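For reference on what a one-key crossfade between two overlapping clips is doing under the hood, here's a hypothetical Python sketch of an equal-power (cosine/sine) crossfade. DAWs offer several fade curve shapes; equal-power is just one common choice and isn't claimed to be what any specific DAW uses.

```python
import math

def equal_power_crossfade(out_clip, in_clip, overlap: int):
    """Join two clips, blending the last `overlap` samples of out_clip with the
    first `overlap` samples of in_clip using cosine/sine (equal-power) gains."""
    if overlap < 0 or overlap > min(len(out_clip), len(in_clip)):
        raise ValueError("overlap must fit inside both clips")
    head = list(out_clip[:len(out_clip) - overlap])
    faded = []
    for i in range(overlap):
        t = (i + 0.5) / overlap              # position through the fade, 0..1
        fade_out = math.cos(t * math.pi / 2)  # 1 -> 0
        fade_in = math.sin(t * math.pi / 2)   # 0 -> 1; fade_out^2 + fade_in^2 == 1
        faded.append(out_clip[len(out_clip) - overlap + i] * fade_out + in_clip[i] * fade_in)
    return head + faded + list(in_clip[overlap:])

if __name__ == "__main__":
    a = [1.0] * 8
    b = [0.5] * 8
    print([round(s, 3) for s in equal_power_crossfade(a, b, 4)])
```

Equal-power keeps the level steady when the two clips are uncorrelated; if they're nearly identical, the middle of the fade comes out about 3 dB hotter, which is why DAWs usually also offer linear (equal-gain) fades.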

-

Nowadays, small differences in response between studio monitors are moot and not worth the effort of shopping around over. If you pick up Sonarworks Reference 4 with their mic, then once you've set up your monitors in the right configuration, calibrate a Reference 4 profile. The mic measures the difference between the expected signal and what your monitors and room are actually giving you, and Reference applies a counter-filter that cancels out the difference. You do have to manually set it to linear phase, which adds some latency to your signal path, but keeping the phase response unaffected is crucial. It gets you way better results than agonizing over configuration settings. The important thing is that you buy a good-quality set with a decent response and have your room at least minimally treated, so that Reference doesn't have to do an unnatural amount of work to fix it. Reference gets a ton of praise all around, but also has a small handful of disappointed customers, so feel free to shop around; multiple other companies do the same thing. https://www.sweetwater.com/store/detail/Ref4StuMicBun--sonarworks-reference-4-studio-with-mic That being said, your mileage may vary if you're throwing a subwoofer in there too, since it needs to calculate L/R independently. Honestly, a subwoofer isn't necessary, and I see a lot of accomplished (home) engineers leaving it out of their setups. Getting monitors with drivers larger than 5" will already give you a good-sounding bass response. Getting a sub can actually be detrimental if your room isn't right for it, or if you aren't prepared to potentially do a lot of interior design work to make it work.
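On the latency point: a linear-phase filter is a symmetric FIR, and a symmetric FIR with N taps delays every frequency by (N - 1) / 2 samples, which is where the added latency comes from. Here's a small Python sketch that builds one (a Hann-windowed sinc low-pass, chosen only because it's short to write, not because it's what any room-correction product uses) and computes that delay. The 8191-tap figure in the example is hypothetical; the post doesn't say how long Reference's filters are.

```python
import math

def windowed_sinc_lowpass(num_taps: int, cutoff_hz: float, sample_rate: float):
    """Hann-windowed sinc low-pass. Its taps are symmetric, so its phase is linear."""
    if num_taps < 3 or num_taps % 2 == 0:
        raise ValueError("use an odd tap count >= 3 so the delay is a whole number of samples")
    fc = cutoff_hz / sample_rate              # cutoff in cycles per sample
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        x = n - mid
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    dc = sum(taps)
    return [t / dc for t in taps]             # normalize to unity gain at 0 Hz

def linear_phase_delay_samples(num_taps: int) -> float:
    """Every frequency through a symmetric FIR is delayed by (N - 1) / 2 samples."""
    return (num_taps - 1) / 2

def linear_phase_latency_ms(num_taps: int, sample_rate: float) -> float:
    return linear_phase_delay_samples(num_taps) / sample_rate * 1000.0

if __name__ == "__main__":
    taps = windowed_sinc_lowpass(101, 1000.0, 48000)
    print(all(abs(taps[i] - taps[-1 - i]) < 1e-12 for i in range(len(taps))))  # True
    # Hypothetical long correction filter at 48 kHz:
    print(f"{linear_phase_latency_ms(8191, 48000):.1f} ms")  # 85.3 ms
```

In general, a longer FIR can shape lower frequencies more precisely, at the cost of proportionally more delay.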