Posts

5,797

Days Won

31

Profile Information

Real Name

Nabeel Ansari

Location

Philadelphia, PA

Occupation

Impact Soundworks Developer, Video Game Composer

Interests

Music, Mathematics, Physics, Video Games, Storytelling

Artist Settings

Collaboration Status

1. Not Interested or Available

Software - Digital Audio Workstation (DAW)

Studio One

Software - Preferred Plugins/Libraries

Spitfire, Orchestral Tools, Impact Soundworks, Embertone, u-he, Xfer Records, Spectrasonics

Composition & Production Skills

Arrangement & Orchestration

Drum Programming

Lyrics

Mixing & Mastering

Recording Facilities

Synthesis & Sound Design

Instrumental & Vocal Skills (List)

Piano

-

Geoffrey Taucer reacted to a post in a topic:

Faithful high definition studio headphones with flat frequency response

-

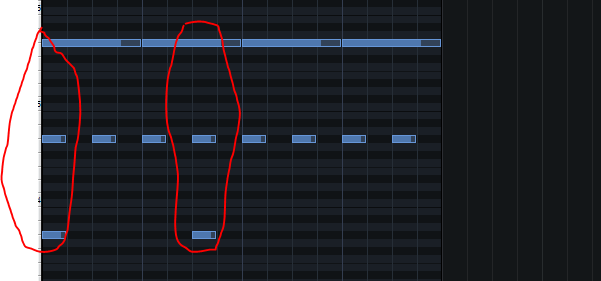

Pretty much. I can't hear anything it does that the first track doesn't already cover. The tempo and time signature are the same throughout (standard 4/4), and the syncopations used here are the same as in the other track. You should become familiar with syncopation, because I don't see another way to help you wrap your head around what's happening in the rhythms. Without it, you're not going to get very far analyzing Ikaruga, or most interesting music for that matter.

https://en.wikipedia.org/wiki/Syncopation

https://www.dropbox.com/s/b3i23bwa021nlhy/2019-08-14_10-00-26.mp4?dl=0

I've recorded a crude video showing the basic thing happening in the Ikaruga tracks. My right hand is just playing basic 8th notes in 4/4. The left hand plays the 1st 8th note and the 4th 8th note. If you draw it out, it looks like this: the top row is the 4 beats in 4/4, the middle row is the eight 8th notes I was playing, and the bottom row is the main driving beat that happens in the song, landing on the down of beat 1 and the up of beat 2. In other words, right on the first beat, and halfway between the second and third beats.
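For anyone who wants to reproduce the drawing in text, here is a minimal Python sketch of the same three-row grid. The function name `rhythm_grid` is hypothetical, made up for illustration; it just marks the beats, the eight 8th notes, and the driving hits on the 1st and 4th 8th notes.

```python
def rhythm_grid(hits, steps_per_beat=2, beats=4):
    """Return three text rows for one bar: beats, 8th-note steps, and hits.

    hits: 1-based step numbers (counted in 8th notes) where the driving beat lands.
    """
    steps = beats * steps_per_beat
    # Row 1: label each step that falls on a beat with its beat number.
    beat_row = " ".join(
        str(i // steps_per_beat + 1) if i % steps_per_beat == 0 else "."
        for i in range(steps)
    )
    # Row 2: every 8th note in the bar (what the right hand plays).
    step_row = " ".join("x" for _ in range(steps))
    # Row 3: only the syncopated hits (what the left hand plays).
    hit_row = " ".join("X" if (i + 1) in hits else "." for i in range(steps))
    return beat_row, step_row, hit_row


for row in rhythm_grid(hits={1, 4}):
    print(row)
```

The hit on step 4 lands on the "and" of beat 2, halfway between beats 2 and 3, which is the off-beat placement that gives the pattern its syncopated feel.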

-

HoboKa reacted to a post in a topic:

Soundtrack Analysis: what is going on in Ikaruga (Gamecube)?

-

I think you're way overthinking it. The song is in a basic 4/4 at the same tempo (somewhere around 145-150 BPM) the whole way through. It's not really doing anything special either, just some syncopation. The "melody begins on the 15th of the previous measure" thing is just called a pickup note. I would be stunned if you told me you've never heard a melody do that before.
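To make the pickup concrete: if the bar is counted in sixteenth notes, the 15th sixteenth is the "and" of beat 4, one 8th note before the next downbeat. The sketch below is a minimal Python illustration, assuming a steady tempo and the 145-150 BPM estimate above. The helper names are hypothetical.

```python
def sixteenth_to_beat(n, per_beat=4):
    """Convert a 1-based sixteenth-note index in a 4/4 bar to a beat position.

    Returns (beat_number, sixteenths_after_that_beat).
    """
    beat = (n - 1) // per_beat + 1
    offset = (n - 1) % per_beat
    return beat, offset


def lead_time_seconds(n, bpm, per_beat=4, beats_per_bar=4):
    """Seconds between the sixteenth note at index n and the next bar's downbeat."""
    sixteenths_left = beats_per_bar * per_beat - (n - 1)
    seconds_per_sixteenth = 60.0 / bpm / per_beat
    return sixteenths_left * seconds_per_sixteenth


print(sixteenth_to_beat(15))  # (4, 2): two sixteenths after beat 4, the "and" of 4
for bpm in (145, 150):
    print(bpm, round(lead_time_seconds(15, bpm), 3))
```

So the pickup arrives roughly a fifth of a second before the downbeat at this tempo, which is exactly one 8th note early.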

-

Nabeel Ansari changed their profile photo

-

Which version to get - Fruity vs. Producer

Nabeel Ansari replied to Turtle's topic in Music Composition & Production

That answer was from 8 years ago.

Nabeel Ansari reacted to a post in a topic:

DAW based on sheet music?

-

DAW based on sheet music?

Nabeel Ansari replied to JohnStacy's topic in Music Composition & Production

Holy shit, what? I mean, even just on the notation front, it looks way less cluttered than traditional software. Thanks for the mention. EDIT: Online research suggests it's very unstable and crashes a lot.

DAW based on sheet music?

Nabeel Ansari replied to JohnStacy's topic in Music Composition & Production

Additionally, it's worth mentioning that software combos like Notion and Studio One let you write in notation and then import directly into the DAW for mockup and mixing. It's not an all-in-one solution, but if you want a way to smoothly carry productivity from traditional composition methods into the production phase, that would be the way to go.

Malcos reacted to a post in a topic:

OCR Cribs (the "Post Pics of your Studio Area" thread!)

-

DAW based on sheet music?

Nabeel Ansari replied to JohnStacy's topic in Music Composition & Production

I think notation input in Logic and REAPER stuff is the best you're gonna get.

What the Heck is that chord?

Nabeel Ansari replied to WiFiSunset's topic in Music Composition & Production

It's two half steps next to each other.
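Stacking two half steps gives a three-note chromatic cluster. As a rough sketch, assuming MIDI note numbers and a root chosen purely for illustration (the chord from the thread isn't reproduced here), this builds the cluster and names its notes.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def stacked_half_steps(root_midi, count=2):
    """Return MIDI notes for `count` half steps stacked on top of root_midi."""
    return [root_midi + i for i in range(count + 1)]


def name(midi):
    """Name a MIDI note using sharps, with middle C (60) as C4."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


cluster = stacked_half_steps(60)  # hypothetical root: middle C
print([name(n) for n in cluster])  # ['C4', 'C#4', 'D4']
```

The outer notes of the cluster span only a whole step (2 semitones).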

Nabeel Ansari reacted to a post in a topic:

Having Trouble with FLStudio

-

Master Mi reacted to a post in a topic:

Faithful high definition studio headphones with flat frequency response

-

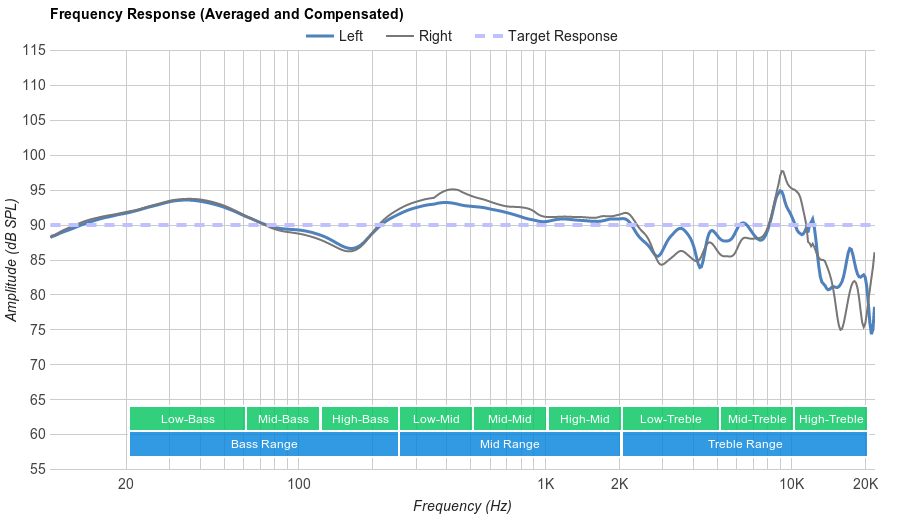

If you're only using the headphones in your studio, buy the most comfortable pair and hit it with Sonarworks for the most ideal headphone response possible. If you plan to use the headphones elsewhere, Sonarworks obviously can't follow you around. In that case, I'd say the best out of what you provided is the K-702, just based on the chart. However, based on testimonials from friends, ubiquity, and an even better chart, I'd say you should probably go with the Sennheiser HD 280. This is the 280 chart I pulled off of Google:

I have used the DT 880 for a long time, but to be perfectly honest, it's just as bad as the frequency response graph shows; it has incredibly shrill spikes in the treble range. When you switch between a flat response and the DT 880's natural response (which I can do, since I use DT 880s + Sonarworks), it's honestly an eye-opener just how bad the DT 880s actually sound. Compared A/B in that fashion, the DT 880's natural sound honestly sounds like the audio is coming out of a phone speaker.

Of course, it doesn't matter too much at the end of the day. Headphone responses are easy to get used to and compensate for because they have very broad features (unlike a bad room, where you can get a random 9 dB spike at 130 Hz and nowhere else due to room geometry). What really matters is that most of the frequency range is represented adequately and that they're comfortable to wear. Every other consideration can be addressed by practice and experience. You're never going to get truly good bass response on headphones (unless you have Nuraphones), and you're never going to get good stereo imaging without putting some crosstalk simulation on your master chain. Unlike studio monitors, there's a pretty low ceiling to how good headphones can sound, and if you're in the $100-150 range, any of the popular ones will do once you get some experience on them.
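The basic idea behind calibration software can be sketched as follows: at each frequency, apply the difference between a flat target and the measured response, and cap the boosts so deep dips aren't chased. This is a simplified illustration, not Sonarworks' actual algorithm; the measurement values and the 6 dB boost / 12 dB cut limits are made-up assumptions.

```python
def correction_gains(measured_db, target_db=0.0, max_boost_db=6.0, max_cut_db=12.0):
    """Per-band EQ gains (dB) that move a measured response toward a flat target.

    measured_db: dict mapping frequency (Hz) -> measured level relative to reference (dB).
    Boosts are capped (assumed limit) because filling deep dips eats headroom.
    """
    gains = {}
    for freq, level in sorted(measured_db.items()):
        gain = target_db - level
        gains[freq] = max(-max_cut_db, min(max_boost_db, gain))
    return gains


# Hypothetical headphone measurement: weak bass and a treble spike near 8 kHz.
measured = {50: -8.0, 100: -4.0, 1000: 0.0, 4000: 1.5, 8000: 7.0}
for freq, gain in correction_gains(measured).items():
    print(f"{freq:>5} Hz: {gain:+.1f} dB")
```

With these numbers, the 50 Hz band wants +8 dB but gets only +6 dB because of the cap. This is one reason headphone bass can be improved but not fully fixed by EQ alone.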

-

djpretzel reacted to a post in a topic:

ProjectSAM Orchestral Essentials 1 or 2?

-

timaeus222 reacted to a post in a topic:

ProjectSAM Orchestral Essentials 1 or 2?

-

ProjectSAM Orchestral Essentials 1 or 2?

Nabeel Ansari replied to a topic in Music Composition & Production

With SSDs, the RAM usage of patches decreases when you lower the DFD buffer setting in Kontakt. A typical orchestral patch for me is around 75-125 MB. Additionally, you can save a ton of RAM by unloading all mic positions besides the close mic in every patch and using reverb processing in the DAW instead. I recommend this workflow regardless of what era of orchestral library is being used, because it leads to much better mixes and allows for blending libraries from different developers.
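Here is a back-of-the-envelope sketch of why both tricks help. It assumes RAM for a disk-streamed patch scales roughly with (sample zones × preload size per zone × loaded mic positions). The zone count, buffer sizes, and mic count below are made-up illustrative numbers, not measurements of any real library or of Kontakt's exact memory model.

```python
def streamed_patch_ram_mb(zones, preload_kb, mic_positions):
    """Rough RAM estimate (MB) for a disk-streamed patch.

    Assumes each sample zone keeps only its preload buffer in RAM per mic
    position, and ignores scripts, effects, and other overhead.
    """
    return zones * preload_kb * mic_positions / 1024


zones = 1500  # hypothetical number of sample zones in one patch
full = streamed_patch_ram_mb(zones, preload_kb=60, mic_positions=4)
lean = streamed_patch_ram_mb(zones, preload_kb=24, mic_positions=1)
print(f"all mics, larger buffer: {full:.0f} MB")
print(f"close mic, smaller buffer: {lean:.0f} MB")
```

Under this simple model, the two savings multiply: dropping to one mic divides RAM by the number of mic positions, and shrinking the buffer scales it down again on top of that.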

That'd be true if human loudness perception were linear and frequency-invariant; it is neither (hence the existence of the dB scale and the Fletcher-Munson curves). If you're listening on a colored system with any dramatic deficiencies, you'll be worse at perceiving the difference between the source and the chosen monitor in exactly the ranges where those deficiencies are. It's the same reason you cannot just "compensate" if your headphones lack bass. If the bass is way too quiet, you are literally worse at telling a +/- 3 dB difference in the signal than you would be if it were coming out of the headphones at the proper loudness, and this is terrible for mixing (why do you think you're supposed to mix at a constant monitoring level?). Only through lots of experience can you compensate for that level of nuance at such a reduced monitoring level, like Zircon can do with his DT 880s; his sub and low end are freaking monstrous but controlled, just the right amount of everything, but he had to learn how to do that over years and years of working with the exact same pair of headphones.

Again, listening to recordings is the worst way to judge anything. For example, listening on this end, I don't really agree with half of the assessments you made of those speakers. The HS7 is actually the worst-sounding speaker in this video, as it sounds like it was run through a bandpass filter. There's no life in any of the transients. Taking the HS7 as a close reference would not be a "fairly good decision" on your part; it would be a pretty bad one. Also, note the disclaimer at the end where he said "these speaker sounds contain room acoustics".
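Because the dB scale is logarithmic, a ±3 dB change corresponds to a fixed ratio, not a fixed amount. The short Python sketch below uses the standard conversions: 20·log10 for amplitude and 10·log10 for power.

```python
def db_to_amplitude_ratio(db):
    """Amplitude (voltage or sound pressure) ratio for a level change in dB."""
    return 10 ** (db / 20)


def db_to_power_ratio(db):
    """Power ratio for a level change in dB."""
    return 10 ** (db / 10)


for change in (+3, -3):
    print(
        f"{change:+d} dB -> amplitude x{db_to_amplitude_ratio(change):.3f}, "
        f"power x{db_to_power_ratio(change):.3f}"
    )
```

These ratios are physical. How loud the change *sounds* also depends on frequency and playback level, which is what the Fletcher-Munson (equal-loudness) curves describe. That is why the same ±3 dB is harder to judge in a band your system reproduces too quietly.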

-

SnappleMan reacted to a post in a topic:

Faithful studio monitor speakers with flat frequency response and truthful high definition sound

-

I want to build you a computer

Nabeel Ansari replied to prophetik music's topic in General Discussion

My message to Brad earlier this week.

So I should amend my statement to be more technically accurate: Sonarworks cannot remove reflections from the room. They are still bouncing around, and no amount of DSP can stop them from propagating. However, the effect is "cancelled" at the exact measured listening position. Sonarworks uses an FIR approach, which is another name for convolution-style filtering. Deconvolving reflections is totally and absolutely in the wheelhouse of FIR filtering, since reverb is "linear" and "time-invariant" at a fixed listening position, a (relatively) fixed monitoring level, and fixed positions of objects and materials in the room. So it absolutely necessitates re-running the calibration process if you move things around in the room, change the gain structure of your system output, etc.

I can't comment on standing waves, but it seems their paper noted that those weren't covered by the filter approach, so they recommended treatment for them. Just from my peanut-gallery background in studying EE, I think it makes sense that standing waves aren't linear and time-invariant, so trying to reverse them through a filter wouldn't go well. The same goes for nulls: if a band is just dying at your sitting position, trying to reverse that via a filter is just not smart at all.

Regardless, if you were to move your head or walk around, you would again clearly notice how horrible the room sounds (though the fixed general frequency response is still an improvement), because now you've violated the "math assumption" by introducing sound differences created by changing your spatial position. This is the disadvantage of relying on DSP calibration (along with latency and slight pre-ringing from the linear-phase filtering), and it's a compelling reason why you wouldn't want to choose it over proper acoustic design in a more commercial/professional studio (you don't want crap sound for the people sitting next to you in a session!).
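To illustrate the "linear and time-invariant" point, model the path to the listening position as direct sound plus one reflection. A truncated FIR inverse filter can then cancel the reflection almost completely at that position. This is a toy sketch with a made-up impulse response (half-level reflection, 3 samples late), not how Sonarworks actually designs its filters.

```python
def convolve(x, h):
    """Direct-form linear convolution of two sequences."""
    out = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            out[i + j] += xi * hj
    return out


def inverse_fir_for_echo(gain, delay, taps):
    """Truncated FIR inverse of h[n] = delta[n] + gain * delta[n - delay].

    The exact inverse 1 / (1 + g z^-d) is the infinite series
    1 - g z^-d + g^2 z^-2d - ...; this keeps only the first `taps` terms.
    """
    g = [0.0] * (delay * (taps - 1) + 1)
    for k in range(taps):
        g[k * delay] = (-gain) ** k
    return g


# Hypothetical room path: direct sound, then a half-level reflection 3 samples later.
room = [1.0, 0.0, 0.0, 0.5]
inverse = inverse_fir_for_echo(gain=0.5, delay=3, taps=6)
result = convolve(room, inverse)
print([round(v, 4) for v in result])
```

The result is a single impulse followed by a tiny leftover term from truncating the series. If you move your head, the reflection's gain and delay change and this inverse no longer matches, which is the violated "math assumption." A deep null would need enormous gain in the inverse, which is why filtering can't sensibly fix it.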
I think it's a pretty decent trade for home producers, and it produces much better results than putting cheap speakers in a minimally treated room and still having to learn how to compensate for issues. I just see it as more expensive and time-consuming. Compensating isn't fun. It's easy on headphones, where problems are usually broad, general tonal shifts in frequency ranges. But in a room (and this is shown in the measurement curve), the differences are not broad and predictable; they're pretty random and localized in small bands. In my opinion, it's difficult to really build a mental compensation map unless you listen to a metric ton of different-sounding music in your room. It is traditional to learn your setup, but I think the tech is there to make the process way simpler nowadays.

To be scientifically thorough, I would love to run a measurement test and show the "after" curve of my setup. Sadly, I don't think it's really possible, because SW has a stingy requirement that, for measurement, the computer's I/O has to run on the same audio interface, and the calibrated system output is a different virtual output, so there's no way I could run the existing calibration and then also measure it in series. All I can do is offer my personal anecdotal experience of how it has improved the sound.

I'm not trying to literally sell it to you guys, and no, I don't get a kickback. I just think it's one of the best investments people can make in their audio before saving up for expensive plugins or anything else. That's especially true because its results are relatively transferable to any new environment without spending any more money, no matter how many times you move, whereas room treatment would have to be redone, maybe with more money spent depending on the circumstances. And given the topic of this thread, it shouldn't be understated that SW calibration can drastically improve the viability of using cheaper sound systems for professional audio work.
I've run calibration at my friend's house with incredibly shitty, tiny $100 M-Audio speakers, set up in just about the worst way possible to place and orient them, and I'd say the end result really was within the ballpark of the sound quality I get in my home room with more expensive monitors and a more symmetrical setup. It wasn't the same, but it was far too accurate (sans any decent sub response) to roll your eyes at. Stereo field fixing is dope too.

@Master Mi I'm not sure what's to be accomplished by linking YouTube videos of the sound of other monitors. They're all being colored by whatever you're watching the YouTube video on. At best, a "flat response" speaker will sound as bad as the speakers you're using to watch the video. Furthermore, a speaker set with the opposite problems to yours will sound flat when it isn't flat at all. Listening to recordings of other sound systems is just about the worst possible way to tell what they sound like.
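This works because responses in a playback chain stack: in dB, each stage's deviation from flat adds to the next. The minimal sketch below uses made-up deviations for three bands only, and ignores the recording mic, the room the video was recorded in, and codec effects. It shows both cases from the paragraph above.

```python
def chain_response_db(*stages):
    """Total per-band deviation (dB) of stages in series: dB deviations add."""
    return [sum(band) for band in zip(*stages)]


# Hypothetical deviations from flat, in dB, for low / mid / high bands.
your_speakers = [-6.0, 0.0, 4.0]
flat_monitor = [0.0, 0.0, 0.0]
opposite_monitor = [6.0, 0.0, -4.0]

print(chain_response_db(flat_monitor, your_speakers))      # [-6.0, 0.0, 4.0]
print(chain_response_db(opposite_monitor, your_speakers))  # [0.0, 0.0, 0.0]
```

The truly flat monitor inherits all of your speakers' coloration. The badly colored one comes out looking perfectly flat.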